
Wednesday, May 20, 2009

David Frum has a very interesting review of How Rome Fell: Death of a Superpower. In it he touches upon two other works which address the same topic, The Fall of Rome: And the End of Civilization and The Fall of the Roman Empire: A New History of Rome and the Barbarians. I've read them both, and they are excellent histories, though, as Frum notes, they take different tacks: the former takes a materialist perspective, the latter a more classical narrative of politics and government. I also agree that to some extent modern multiculturalism has fed into the revisionism which suggests that there was no decline from Classical to Late Antiquity. In From Plato to NATO: The Idea of the West and Its Opponents, conservative historian David Gress actually shows how pre-multiculturalist liberal intellectuals, such as Will Durant, privileged pre-Christian antiquity, in particular Greece, and denied that the entire period after the fall of Rome and before the rise of the Enlightenment (with a nod to the Renaissance) made any substantive contribution to the liberal democratic consensus.

Where you stand matters in making these sorts of judgements. For obvious reasons Catholic Christian intellectuals of what we term the medieval period did not view the ancient world as superior to their own, because whatever its material or intellectual merits, it was fundamentally pagan at its roots. Though the modern West is still predominantly Christian in religion, that religion no longer serves as quite the core anchor that it once did,* and material considerations as well as abstractions such as "democracy" and the "republic" are given greater weight than they once were. I believe that David Gress is right to suggest that attempts by secular liberal historians to deny the essential role of Christendom, the period between antiquity and the age of the nation-state, were driven more by politics than reality. The founders of the American republic were obviously classically educated, and that influenced their outlook, as evidenced by their writings as well as concrete aspects of culture such as architecture. But they were also heirs to a tradition which defended the customary rights of Englishmen, rights which go back ultimately to Anglo-Saxon tribal law. It is simply laughable to imagine that Greek democracy slept for 2,000 years and reemerged in the late 18th century in the form of the American democratic republic. But the very same historical factors which make Western civilization what it is today also result in a set of normative presuppositions that do naturally marginalize or diminish the glory of medieval civilization when set next to its classical predecessor.

Also, one minor point. Frum says:

...Some scholars have speculated that the empire was depopulated by plague after 200. (William McNeill wrote a fascinating history of the global effects of disease, Plagues and Peoples, that argues for disease as a principal cause of Roman decline.
In regard to the hypothesis of demographic decline due to plague, the fact that only Claudius II Gothicus died of this cause is likely a weaker point than one might think. Only one monarch died of the Black Plague, which most historians assume killed 1/4 to 1/3 of Europeans. This is probably most easily interpreted in light of the reality that elites were relatively well fed, and might therefore have been less susceptible to disease than the populace as a whole. The connection between poor nutrition and a relatively anemic immune response to disease has been offered as one reason why deadly pandemics were much more common in the pre-modern period, when a far higher proportion of the population was nutritionally stressed.

H/T Conor Friedersdorf

* I think the Islamic world is a better model of how medieval Christians might view their classical pagan cultural forebears. Egyptians take pride in the antiquity of their society, but what came before Islam was jahiliyya, the "age of ignorance." The preservation of Greek knowledge by the Arab Muslims during the first few centuries of Islam exhibited a strong selection bias toward works of abstract philosophy. Ancient Greece's cultural production in the arts held no great interest for them, so it is only thanks to the Byzantines that many of those works were preserved.

Labels: culture, History, intellectual history

Sunday, April 12, 2009

Less Wrong makes an analogy between Austrian economics and Calvinist presuppositionalism. I've turned off comments, so you can comment there. Especially see Robin Hanson's comment. When I expressed skepticism of rationality, this is part of what I was talking about. Deduction gets you only so far, mostly because humans are stupid and imprecise.

Labels: intellectual history

Monday, March 30, 2009

Steve brings up the fact that there is a trend in Indian culture toward memorizing stuff as a way of showing off one's intellect. This seems plausible. But I think a bigger point might be that rote learning and feats of memory have traditionally been more important in human history than they are now, and Western societies in particular are on the cutting edge in placing more emphasis on creative, original thinking, which shows the ability to recombine concepts into a novel synthesis, as opposed to regurgitating ancient forms. In fact, before the printing press made books much more common, there was a whole field in the West termed the Art of Memory.

Note: I would also add that memory has different utility in different fields. The physicists I've known seem to be rather slack about memorization, but their field is one where theory is robust enough to generate useful inferences. In some ways the rise of mathematical science is the story of the decline of memory.

Labels: intellectual history

Thursday, March 19, 2009

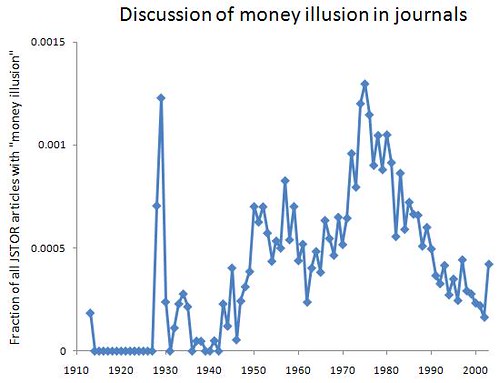

Tracking economists' consensus on money illusion, as a proxy for Keynesianism

posted by

agnostic @ 3/19/2009 02:07:00 PM

I'm probably not the only person playing catch-up on economics in order to get a better sense of what the hell is going on. Only two economists clearly called the housing bubble and predicted the financial crisis -- Robert Shiller and Nouriel Roubini -- and only Shiller has several books out on the topic. With Nobel Prize winner George Akerlof, Shiller recently co-authored Animal Spirits, a popular-audience book making the case that human psychology and behavioral biases need to be taken into account when explaining any aspect of the economy, especially when things get all fucked up. That argument would seem superfluous, but economics is the butt of "assume a can opener" jokes for a reason.

To be fair to the field, though, they point out that before roughly the 1970s, mainstream economists all believed that the foibles of human beings, as they really exist, should be incorporated into theory, rather than dismissed as behaviors that only an irrational dupe would show. Remember that Adam Smith was a professor of moral philosophy and wrote extensively about human psychology. From what I can tell, the recent shift does not have to do with the introduction of math nerds into the field, since the man who laid most of the formal foundation -- Paul Samuelson -- was squarely in the "psychology counts" camp. It looks more like a subset of math nerds is responsible -- call them contemptuous autists, as in "What kind of idiot would engineer the brain that way?!" (Again, these are very rough impressions, and I'm winging it in categorizing people.)

Of the many ideas relevant to understanding financial crises, a key one from the old-school period is money illusion, or the idea that people think in terms of nominal rather than real prices. For example, if the nominal prices of the things you buy go down by 20%, you are no better or worse off in real terms if your nominal wages also go down by 20%. However, most people don't think this way, and would see a 20% pay cut in this context as a slap in the face, a breach of unspoken rules of fairness. This is an illusion because a dollar (or euro, or whatever) isn't a fixed unit of stuff -- what it measures changes with inflation or deflation. It's the same reason that women's clothing designers use fuzzy units of measurement -- "sizes" -- rather than units that we agree to fix forever, such as inches or centimeters. By artificially deflating the spectrum of sizes, designers let a woman who used to wear a size 10 now wear a size 6, and she feels much better about herself, even if she has stayed the same objective size or perhaps even gotten fatter. How could shoppers be so stupid as to fall for this, when everyone knows it's a trick?
Who knows, but they do. Similarly, everyone knows that price inflation exists, and yet the average person still falls victim to money illusion, and economic theory will just have to work that in, just as evolutionary theory must work in the presence of vestigial organs, sub-optimally designed parts, and other things that make engineers' toes curl. Animal Spirits provides an overview of the empirical research on this topic, and it looks like there's convincing evidence that people really do think this way. To take just one line of evidence, wages appear to be very resistant to moving downward, even when all sorts of other prices are declining, and interviews and surveys of employers reveal that they are afraid that wage cuts will demoralize or otherwise antagonize their employees. This is obviously a huge obstacle during an economic crisis, since firms will find it tough to stem their losses by lowering wages -- even by lowering them only enough to match the now lower cost of living.

As Akerlof and Shiller present the history of the idea, it was mainstream before Milton Friedman and like-minded economists tore it down starting around 1967 and culminating by the end of the 1970s, although they hint that the idea may be seeing a rebirth. As an outsider, my first question is -- "is that true?" I searched JSTOR for "money illusion" and plotted over time the fraction of all articles in JSTOR that contain this term.

Although the term was coined earlier, the first appearance in JSTOR is a 1913 article by Irving Fisher, and the surge around 1928-1929 is due to commentary on his book The Money Illusion. Academics were still talking about it somewhat through 1934, probably because the worst phase of the Great Depression spurred them to try to figure out what went wrong. The idea becomes more discussed during World War II, and especially afterwards, when Keynesian thought swept through the academic and policy worlds of the developed countries.
In the mid-'50s, the term's growth decelerates and then its usage declines, although the policies of its believers are still in full swing. I interpret this as showing that from the end of WWII to the mid-'50s, their ideas were debated more and more, and after this point they considered the matter settled. Starting in the mid-to-late 1960s, though, the term surges in usage to even greater heights than before, peaking in 1975 and plummeting afterward. This of course parallels the questioning of many of the ideas taken for granted during the Golden Age of American Capitalism, and the transition to Friedman-inspired thinking in academia and Thatcher-inspired thinking in public policy. Party affiliation clearly does not matter, since the mid-'40s to mid-'60s phase showed bipartisan support for Keynesian thinking, and after the mid-'70s there was also a bipartisan consensus on theory and policy applications. I interpret this second rise and fall as a re-ignited debate that was then considered resolved -- only this time with the opposite conclusion, i.e. that "everyone knows" now that money illusion is irrational and therefore doesn't exist. The data end in 2003, since there's typically a five-year lag between the publication date of an article and its appearance in JSTOR. So, unfortunately, I can't use this method to confirm or disconfirm Akerlof and Shiller's hints that the idea might be on its way to becoming mainstream in the near future.
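The reading above rests on two steps: turning raw hit counts into a share of all articles, and telling a slowing rise apart from an outright decline. Here is a minimal Python sketch of both, assuming you already have per-year counts for the keyword and for some estimate of the total, collected by hand from search results. The function names and the numbers in the usage lines are hypothetical, not real JSTOR figures.

```python
def prevalence(hits, totals):
    """Share of all articles per year that mention the term.

    hits, totals: dicts mapping year -> article count (hypothetical inputs).
    """
    return {year: hits[year] / totals[year] for year in sorted(hits)}


def classify_phases(series):
    """Label each year (after the first) by how the share changed from the year before.

    'declining'    -> the share fell
    'decelerating' -> the share rose, but by less than in the previous step
    'growing'      -> the share rose at least as fast as before
    """
    years = sorted(series)
    labels = {}
    prev_change = None
    for before, after in zip(years, years[1:]):
        change = series[after] - series[before]
        if change < 0:
            labels[after] = "declining"
        elif prev_change is not None and change < prev_change:
            labels[after] = "decelerating"
        else:
            labels[after] = "growing"
        prev_change = change
    return labels


# Hypothetical counts, for illustration only.
hits = {1950: 10, 1951: 20, 1952: 25, 1953: 20}
totals = {1950: 1000, 1951: 1000, 1952: 1000, 1953: 1000}
print(classify_phases(prevalence(hits, totals)))
# {1951: 'growing', 1952: 'decelerating', 1953: 'declining'}
```

With real data, year-to-year noise would make single steps unreliable, so one would probably smooth the series first (say, with a few-year moving average); that choice is an assumption of this sketch, not something the post describes.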
Whatever the empirical status of money illusion turns out to be -- and it does look like it's real -- the bigger question is whether or not economists will return to a serious, empirical consideration of psychology: both the universal features (however seemingly irrational) and the individual differences that allow Milton Friedman to easily work through a 10-step chain of backward induction, but not a typical working-class person who isn't smart enough to get into college (and these days, that's saying a lot). If all the positive press, not to mention the book deals, that Shiller is getting is any sign, the forecast looks optimistic.

Labels: Economics, intellectual history, politics, Psychology

Tuesday, November 18, 2008

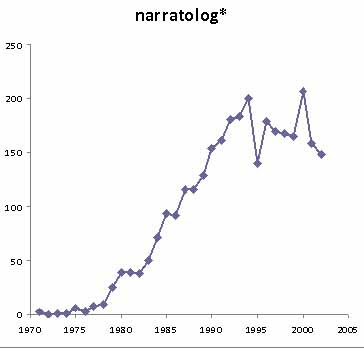

Previously I looked at changing fashions in academic theories and their associated buzzwords, using the articles archived in JSTOR as a sample: see part 1, part 2, and part 3. What about the thing that arts & humanities academics are supposed to study -- the text itself? I mean, the vulgar consuming public may flit from one "it" author to the next, but surely academics are above such fickleness?

Most of them are happy to admit that they don't make grand claims about Truth -- that's only what we evil science people do. But they don't freely admit to being driven mostly by a blind adherence to fashion -- whatever they're showing in Paris this season -- and it's time to strike back at them for this, after that knuckle-rapping they tried to give us in the '90s. Again, I've already shown how fashion works in their theories -- now it's time to show that their consumption patterns (i.e., which authors or artists they read and analyze) are also driven by fashion. Here is a graph using only English-language articles and reviews from the "Language and Literature" category of journals in JSTOR.

The search terms were the authors' surnames, except for Jane Austen, whose full name I searched. This presents no problem for Proust and Kafka, although Joyce is a bit more common as a surname. We don't have to worry about Joyce Carol Oates, as she became popular when James Joyce was declining in popularity. Still, it's clear that the order-of-magnitude increase in "Joyce" is due to James Joyce, as no one else with that name was so popular among professors. The graph starts at 1915 because 1914 is, according to an arts-major legend, the year that Modernism was born.

I included Jane Austen for comparison. Even a traditional author like her shows ups and downs, although her popularity does not oscillate nearly as wildly as that of the Modernists. She is clearly less popular than they are, though. From the mid-1920s to the mid-1940s, Joyce and Proust are neck and neck, but in the post-WWII period, Joyce has always been more popular -- for Christ's sake, fully 10% of all Lang & Lit articles refer to him during 1970-1990. Even scientists were savvy enough to know that he was the guy you named something after just to prove how clever and initiated you were.
Kafka is only slightly less popular than Proust -- which I find surprising, since Proust would seem to have much greater snob appeal, Kafka being the emo band whose posters you plastered your walls with in high school, but whom you loudly deny ever having liked once you're a grown-up. Unfortunately I can't easily tell where these articles are coming from -- are the upper crust of arts departments writing mostly about Proust and Joyce, while the reject departments with no friends write mostly about Kafka and Salinger? I have no intuition here, so arts people, feel free to weigh in.

At any rate, we see that, just as with their theoretical badges, academics make their consumption a fashion symbol too. Between 1935 and 1945, the three Modernists begin to soar in popularity, but somewhere between 1955 and 1965 they hit diminishing returns, peak around 1975, and get tossed out after that. Note that this is not due to the rise of Postmodernism -- that only got started in the mid-1970s and was big in the 1980s and '90s. Already by 1965, Modernist authors saw their growth slow down. Besides, Postmodernism was attacking the assumptions of another group of academics, rather than attacking a group of authors, painters, or musicians.

The data only go up through 2001. Just eyeballing it, it's conceivable that by 2025, these three Modernists won't be given more respect than established authors like Jane Austen, and of course some may see their popularity plummet further to zero. This is a separate question from their artistic merit, obviously. For example, here's some insight into the popularity of Shakespeare in Samuel Pepys' London:

[A]nd then to the King's Theatre, where we saw "Midsummer's Night's Dream," which I had never seen before, nor shall ever again, for it is the most insipid ridiculous play that ever I saw in my life. I saw, I confess, some good dancing and some handsome women, which was all my pleasure.
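The claim that the three Modernists rise, plateau, and fall in step can be put as a number. A minimal sketch, assuming each author's share of articles has been read off at the same years: compute the Pearson correlation for every pair of authors. The function names and the sample values below are hypothetical placeholders, not readings from the graph.

```python
import math


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs))
    sy = math.sqrt(sum((y - my) ** 2 for y in ys))
    return cov / (sx * sy)


def pairwise_similarity(trajectories):
    """Correlation for every pair of named trajectories sampled on the same years."""
    names = sorted(trajectories)
    return {
        (a, b): pearson(trajectories[a], trajectories[b])
        for i, a in enumerate(names)
        for b in names[i + 1:]
    }


# Hypothetical shares (% of articles) at 1935, 1945, ..., 1995, made up for illustration.
shares = {
    "Joyce": [1.0, 3.0, 6.0, 9.0, 10.0, 8.0, 5.0],
    "Proust": [1.0, 2.0, 4.0, 5.0, 5.5, 4.5, 3.0],
    "Kafka": [0.5, 1.5, 3.5, 4.5, 5.0, 4.0, 2.5],
}
for pair, r in pairwise_similarity(shares).items():
    print(pair, round(r, 2))
```

One caution: any two series that both rise and then fall will correlate strongly, so a high r on its own shows a shared shape, not a shared cause. Comparing peak years, or correlating year-to-year changes instead of levels, would be a stricter check.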
A devil's advocate would say that academics gradually stopped writing about these authors because they'd exhausted what there is to say about them. But that's not true: the trajectories are too similar. They just happened to decide "we've gotten all we can" from all three authors at more or less the same time? That sounds, instead, like they just grew bored of the Modernists in general and only wore them out to formal events where they're de rigueur, rather than show them off to every stranger they chatted up at a cocktail party, academic conference, or public restroom.

Labels: culture, intellectual history

Monday, September 29, 2008

Well, with the first post and a response to criticisms out of the way, I'll conclude with the graphs on some ideas that are gaining in popularity in the study of mankind. Where it says "social sciences," I've only searched JSTOR for the following journal categories: anthropology, economics, education, political science, psychology, and sociology. The social sciences, basically. (And I've used appropriate neutral comparisons as before.) The reason is that if "heritability" increases in usage, that could be due to its use in genetics -- I want to see how popular it is when talking about humans. (As before, graphs have simple titles, while the full search terms are listed in an Appendix.)

Contrary to what you might think, since about 1950 academics have become increasingly interested in the genetic influence on human nature, reversing a period of decline from roughly 1930 to 1950. There is also an apparent cyclical pattern on top of the increasing trend. Just make sure you refer to the heritability of "cognitive ability" rather than of "IQ" (see below).

I've broken up the graphs on Darwin in the social sciences to make the trends clearer. There is an early phase in Victorian times when Darwin's thoughts were everywhere, especially in discussing human beings. Around the turn of the century, his ideas become less popular, as discussed in the previous post. Around 1940, when his ideas come back due to the modern synthesis in biology, they become more popular in the social sciences as well. Indeed, since the mid-1940s, his ideas have only become more important to social scientists -- whether they like it or not.

Notice that while "IQ" goes through cycles around an increasing trend, its synonym "cognitive ability" shows exponential increase. I assume that this is because "cognitive ability" is not a politicized term, while "IQ" is, resulting in outbreaks of hysteria where many more people of any ideological background begin talking a lot about it. The same is true of "sociobiology," which Leftist academics such as those in the Sociobiology Study Group tainted with negative political associations, compared to its synonym "evolutionary psychology." Now, someone will say that evolutionary psychology is different -- that it studies mental, psychological processes rather than just observed behavior. But that's nonsense -- if you've read any of the many evolutionary psychology articles about digit ratios, waist-to-hip ratios, whether the female orgasm is adaptive, and so on, you know that mental processes and cognitive science models rarely come up, except in the study of vision.
Indeed, "evolutionary psychology" increases at just the time when "sociobiology" decreases, in the mid-1980s, showing that the former is simply replacing the latter as the preferred term. As further evidence that a decline in usage means a decline in popularity, "evolutionary psychology" gets lots of hits in the 1890s when pioneers of psychology like William James were obsessed with integrating evolution and the study of the human mind, and takes a nosedive and lies dead once behaviorism takes over in psychology around the 1920s. Because "evolutionary psychology" and "cognitive ability" are safe terms politically, these are the obvious choices for people who don't want to have water poured over their head at a conference -- and the data show this rational choice. Interest has continued to skyrocket, although people use different codewords. Nothing like this turned up in the first post because it is not political suicide to talk about postmodernism or Marxism in academia -- but just try bringing up "IQ". It is fascinating that academics can adhere to the ideas of Marx, Lenin, Trotsky, or Stalin and be taken seriously, while anyone who would do so for the ideas of Mussolini or Hitler would be made a total pariah. I wouldn't take either numbskull seriously, but most educated people will, perhaps grudgingly, give a free pass to those who revere the ideological or political figures associated with The Other Great Dictatorships and Mass Murders. I've already made general observations in the first post, and they carry over here, especially the fact that the history of ideas seems so unaffected by the history of the entire outside world -- one more idea that Marx got wrong. There is clearly change, struggle between groups, and so on, but they are largely internal to academia. The future -- or the near-future anyway -- looks pretty bright for those interested in the biological approach to studying humans and their ways, and who believe things like IQ are important. 
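The contrast drawn earlier between "IQ" cycling around a trend and "cognitive ability" growing exponentially can be checked with a log-linear fit: exponential growth is a straight line on a log scale, and the slope gives the growth rate and doubling time. A minimal sketch, assuming a list of per-year hit counts with no zeros (the logarithm is undefined there); the function name and the synthetic series are hypothetical.

```python
import math


def loglinear_fit(years, counts):
    """Least-squares fit of log(count) = a + r * year.

    Returns (r, doubling_time, residuals). Counts must be positive, and
    doubling_time is inf if the fitted series is not growing. Large,
    wave-like residuals suggest cycles around the trend rather than a
    clean exponential.
    """
    logs = [math.log(c) for c in counts]
    n = len(years)
    my, ml = sum(years) / n, sum(logs) / n
    sxx = sum((y - my) ** 2 for y in years)
    r = sum((y - my) * (lg - ml) for y, lg in zip(years, logs)) / sxx
    a = ml - r * my
    residuals = [lg - (a + r * y) for y, lg in zip(years, logs)]
    doubling_time = math.log(2) / r if r > 0 else float("inf")
    return r, doubling_time, residuals


# Synthetic series that doubles every 5 years, for illustration only.
years = list(range(1980, 2001))
counts = [10 * 2 ** ((y - 1980) / 5) for y in years]
r, doubling, _ = loglinear_fit(years, counts)
print(round(doubling, 2))  # 5.0
```

On a series like "IQ" as described above, one would expect a positive slope but residuals that swing above and below zero in long waves; on a clean exponential, the residuals stay near zero.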
Any students who are still considering the social constructionist, Marxist, feminist, or Whateverist approach should at least learn the new theories, if for no other reason than to be employable in 5 to 10 years. Hell, you might even consider it a kind of Pascal's Wager.

APPENDIX

Here are the search terms I used, once again searching the full text of articles and reviews:

"cognitive ability" OR "cognitive abilities"
"darwin*" NOT "social darwinism" NOT "social darwinist" NOT "social darwinists"
"evolutionary psychology" OR "evolutionary psychologist" OR "evolutionary psychologists"
"heritability" OR "heritable"
"IQ"
"sociobiolog*"

Labels: culture, intellectual history

My first post detailed the demise of woolly-headed theories in academia. In this post, I'll address some common criticisms that have come up so far. In the third post, just above this one, I will look at a rival class of theories, namely the scientific and in particular biological approaches to studying humanity. The take-home message is that, while the Blank Slate theories are slowly being driven out of academia, new ones based on the biological sciences are becoming ever more popular. But let's start with the criticisms:

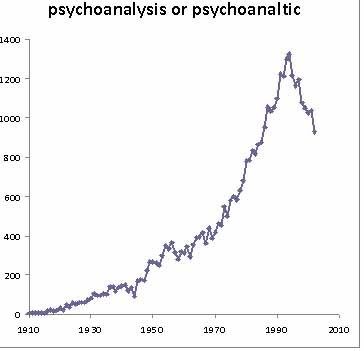

1) You're confusing popularity with accuracy, truth, etc. I never said anything to this effect. I am just interested in whether certain theories are becoming more or less prevalent. Now, I happen to believe that in the case of, say, psychoanalysis or Marxism, the theories are becoming less popular because people realize that they're not very insightful. And certainly what I think is a great theory could become unfashionable for whatever reason. Whether you're celebrating or mourning the death of some theory, I don't care -- I just want to show whether it is or is not dying.

2) You didn't account for the lag between when an article is published and when it is archived in JSTOR. I did do that, but I was only explicit about it in the comments to the first post. Journals in JSTOR have a "moving wall" between original and archived dates, with most having a lag of 3 to 5 years. Here is the distribution of lag times. By excluding data from 2003 onward, I've taken care of 88% of journals. And I don't want to hear a non-quantitative objection that "the remainder could be affecting the results" -- tell me what you think the data point should be for, say, 2001, and then derive how large an effect the remaining 12% of journals would have to have in order to produce that value. We'll see how reasonable that sounds. Moreover, since no moving wall is greater than 10 years, any decline that started before 1998 is not subject to even this vague objection -- for example, Marxism, feminism, and psychoanalysis.

3) You don't have a neutral control case to show that Marxism is "really" decreasing in popularity. I did admit in the first post that ideally we'd have the total number of articles that JSTOR has for a given year, and that we'd divide the number of articles with Marxism by the total number of articles to get a frequency or prevalence. We can estimate the total by searching for articles with some highly frequent word, such as "the", so that the number returned is very close to the total.
For "the", this approach is almost guaranteed to work, since almost no article would slip through the net. However, JSTOR has a list of highly frequent words that it doesn't allow in searches. Still, not all common words are blocked. I consulted a frequency list compiled by Oxford Online and chose the highest-ranking words there which are not blocked by JSTOR, though I excluded the personal pronouns and "people," since I don't expect those to show up much in hard science or social science journals. This gives the variants of "time," "know," "good," and "look." So, I've estimated the total number of articles for a year by searching for "time" OR "know*" OR "good*" OR "look*", where the asterisk means the word ending can vary. How closely this estimates the true total is not of interest -- the point is that it serves as a common, neutral yardstick to measure the change from one year to the next.

Interestingly, using this control has almost no effect on the shape of the graphs from the first post. That is because the total number of articles increases only linearly from about 1940 onward, whereas the articles on postmodernism increase or decrease exponentially -- and an exponential divided by a linear function still grows or dies off very fast. I've redrawn the original graphs and posted them here because it's easier for me; sometime soon, I'll substitute them into the first post for the record. The only change I make to my original observations is that social constructionism is not so obviously declining anymore, although it has plateaued and apparently been declining since 1998. If I had to guess about its behavior after 2002, I would say it's heading downward simply because none of the other theories plateaued for very long -- they quickly hit a peak and declined, so a steady high value does not appear to be stable for such theories.
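The point that dividing by a linearly growing control barely changes an exponential curve is easy to check with arithmetic. A minimal sketch with made-up series: keyword hits that change exponentially at a rate of 0.1 per year, and a control count that grows linearly from 1000 to 3000 over 40 years. The numbers and the `normalize` helper are illustrative assumptions, not JSTOR data.

```python
import math


def normalize(hits, control):
    """Divide keyword hits by the neutral control count, year by year."""
    return [h / c for h, c in zip(hits, control)]


# Hypothetical series over 41 years: keyword hits change exponentially,
# while the control ("time" OR "know*" OR ...) grows only linearly.
years = range(41)
control = [1000 + 50 * t for t in years]             # linear growth: 1000 -> 3000
rising = [10 * math.exp(0.1 * t) for t in years]     # exponential rise
falling = [500 * math.exp(-0.1 * t) for t in years]  # exponential decline

rise_ratio = normalize(rising, control)
fall_ratio = normalize(falling, control)

# Raw rise is e**4 (about 55x); normalized, it is still e**4 / 3, about 18.2x.
print(round(rise_ratio[-1] / rise_ratio[0], 1))
# A decline only gets steeper after normalizing: 3 * e**4, about 163.8x smaller.
print(round(fall_ratio[0] / fall_ratio[-1], 1))
```

The linear control can shrink a 40-year exponential change by at most the factor by which the control itself grew (here 3x), which is small next to the e**4 swing in the keyword.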
4) You're mistaking a decline in usage with a decline in belief -- once the idea becomes taken for granted, practitioners stop referring to it explicitly. Just on an intuitive level, we know this is horseshit -- do physicists not use the words "gravity" or "electricity" anymore, or no longer refer to Newton? This objection exemplifies the problem with the average arts and humanities major: he is content to build a logically coherent argument without doing a quick reality check for its explanatory plausibility. I guess that's why they end up in law firms. But to provide evidence that usage tracks belief, here are some graphs for hard science keywords. In the case of Darwin, I excluded articles on "social Darwinism," which appears to be a, er, social construction in academia. See here. I have data on academic scarewords like "biological determinism," and perhaps in a future post I'll show those. Right now, I want to focus on articles that are at least somewhat level-headed. For ease of inspection, I've given each graph a simple title, and list the search terms at the end of this post in an Appendix. As the disciplines of population genetics and sociobiology have become staples of biology, mentioning them by name has not declined -- just the opposite. Because they are such thriving fields, writing about them explicitly has shot up. Darwin's thoughts were immensely popular in Victorian times, but they languished because no one could tell how to unite them with the study of heredity. That was, until the modern evolutionary synthesis, which began in the late 1930s -- since then, interest has exploded. The same goes for Mendel's thoughts -- no one knew what the physical basis for his "gene" idea was, until the relationship between the genetic code and DNA was laid out in the late 1950s. 
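Counting Mendel mentions takes some care, which is why the Mendel query in the Appendix carries a NOT "mendelss*" clause: a broad prefix pattern such as mendel* also sweeps in the composer Felix Mendelssohn. A small sketch of the include/exclude logic on single words, using Python's `fnmatch.fnmatchcase` as a stand-in for wildcard matching (an assumption for illustration; JSTOR's own matching rules may differ). The `matches` helper is hypothetical.

```python
from fnmatch import fnmatchcase


def matches(word, include, exclude=()):
    """True if `word` matches any include pattern and no exclude pattern.

    Patterns use shell-style wildcards ('*' = any run of characters);
    matching is done on lowercase text, so patterns should be lowercase.
    """
    w = word.lower()
    hit = any(fnmatchcase(w, p) for p in include)
    blocked = any(fnmatchcase(w, p) for p in exclude)
    return hit and not blocked


words = ["Mendel", "Mendelian", "Mendelism", "Mendelssohn"]
broad = [w for w in words if matches(w, ["mendel*"])]
filtered = [w for w in words if matches(w, ["mendel*"], ["mendelss*"])]
print(broad)     # ['Mendel', 'Mendelian', 'Mendelism', 'Mendelssohn']
print(filtered)  # ['Mendel', 'Mendelian', 'Mendelism']
```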
The Darwin and Mendel curves show that even hard science ideas can rise and fall and rise again, in these cases probably because some key aspect was found unsatisfying, until a later discovery fixed the problem, allowing the idea to become popular again. So there's hope for the unemployed psychoanalyst yet, assuming he can stick around for a half-century.

APPENDIX

Here are the search terms I used:

"population genetic" OR "population genetics" OR "genetics of populations"
"sociobiolog*"
"darwin*" NOT "social darwinism" NOT "social darwinist" NOT "social darwinists"
"mendel" OR "mendelian*" OR "mendelis*" NOT "mendelss*"

I put the last restriction on the Mendel search because I got a lot of results about the composer Felix Mendelssohn. As with the first post, I searched the full text for both articles and reviews.

Labels: culture, intellectual history

Monday, September 22, 2008

Graphs on the death of Marxism, postmodernism, and other stupid academic fads

posted by

agnostic @ 9/22/2008 12:17:00 AM

[Note: I'm rushing this out before the school week starts, as I need sleep, so if it seems unedited, that's why.]

We are living in very exciting times -- at long last, we've broken the stranglehold that a variety of silly Blank Slate theories have held on the arts, humanities, and social sciences. To some, this may sound strange, but things have decisively changed within the past 10 years, and these so-called theories are now moribund. To let those out of the loop in on the news, and to quantify what insiders have already suspected, I've drawn graphs of the rise and fall of these fashions. I searched the archives of JSTOR, which houses a cornucopia of academic journals, for certain keywords that appear in the full text of an article or review (reviews are included since sometimes the big ideas appear in books rather than journals). This provides an estimate of how popular the idea is -- not only the true believers, but their opponents too, will use the term. Once no one believes it anymore, the adherents, opponents, and neutral spectators will have less occasion to use the term. I excluded data from 2003 onward because most JSTOR journals don't deposit their articles in JSTOR until 3 to 5 years after the original publication. Still, most of the declines are visible even as of 2002.

Admittedly, a better estimate would be to measure the number of articles with the term in a given year, divided by the total number of articles that JSTOR has for that year, to yield a frequency. But I don't have the data on total articles. However, on time-scales when we don't expect a huge change in the total number of articles published -- say, over a few decades -- we can take the total to be approximately constant and use only the raw counts of articles with the keyword. Crucially, although this may distort our view of an increasing trend -- which could be due to more articles being written in total, while the frequency of those of interest stays the same -- a sustained decline must be real, as long as the total number of articles is not itself shrinking.
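The asymmetry in that last sentence can be seen with a few made-up numbers: when the total grows, a rising raw count can hide a flat share, but a falling raw count over a growing (or flat) total always means a falling share. A minimal sketch with hypothetical counts and helper names:

```python
def frequencies(hits, totals):
    """Share of all articles containing the keyword, year by year."""
    return [h / t for h, t in zip(hits, totals)]


def strictly_decreasing(xs):
    """True if every value is smaller than the one before it."""
    return all(b < a for a, b in zip(xs, xs[1:]))


# Hypothetical yearly totals that keep growing.
totals = [1000, 1100, 1200, 1300]

rising_counts = [10, 11, 12, 13]   # raw count rises...
print(frequencies(rising_counts, totals))  # ...but the share is flat at 1% every year

falling_counts = [30, 28, 25, 20]  # raw count falls...
print(strictly_decreasing(frequencies(falling_counts, totals)))  # True: the share falls too
```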
Here are the graphs (an asterisk means the word endings could vary):

[Graphs: yearly counts of JSTOR articles containing each keyword]

Some thoughts:

First, there are two exceptions to the overall pattern of decline: orientalism and post-colonialism. The former may be declining, but it's hard to say one way or the other. The latter was still holding steady in 2002, though its growth rate had clearly slowed, so its demise seems to be only a matter of time -- by 2010 at the latest, it should show a downturn.

Second, aside from Marxism, which peaked in 1988, and social constructionism, which began to decline in 2002*, the others began to fall between roughly 1993 and 1998. It is astonishing that fashions founded at such different times all fell within such a narrow window. Marxism, psychoanalysis, and feminism are very old compared to deconstruction or postmodernism, yet it was as though during the 1990s an academia-wide clean-up swept away all the bullshit, no matter how long it had been festering there. If we wanted to model this, we would probably use an S-I-R-type model of the kind used for the spread of infectious diseases. But we'd have to include an exogenous shock sometime during the 1990s, since it's unlikely that epidemics that had begun 100 years apart would, through their own inner workings, decline at the same time. It's as if we had started living at sparser population densities, where diseases old and new could not spread so easily, or had stumbled onto an antibiotic that cured us of diseases, some of which had plagued us for much longer than others.

Third, notice how simple most of the curves look: few show much noise or any smaller-scale cycles. That's despite the vicissitudes of politics, economics, and other social changes -- hardly any of it made an impact on the world of ideas. I guess they don't call it the Ivory Tower for nothing. About the only case you could make is for McCarthyism halting the growth of Marxist ideas during most of the 1950s.
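The S-I-R idea can be sketched roughly like this, treating academics as susceptible (S), infected with a theory (I), or recovered and immune (R). This is a minimal textbook S-I-R under stated assumptions, not a model fitted to the JSTOR counts: beta is the transmission rate, gamma the rate at which believers drop the theory, and the exogenous shock is modeled, hypothetically, as multiplying beta by shock_factor from shock_year onward. All parameter values are illustrative. Once beta times shock_factor falls below gamma, the infected share shrinks from the shock year on, whatever stage the epidemic had reached, as long as it was still growing; that is the sense in which one common shock can make old and new fashions turn down together.

```python
from typing import List, Tuple

State = Tuple[float, float, float]  # (S, I, R) as fractions of all academics


def simulate_sir(beta: float, gamma: float, i0: float, years: int,
                 shock_year: int, shock_factor: float,
                 steps_per_year: int = 100) -> List[State]:
    """Euler-integrate a basic S-I-R model with a one-time exogenous shock.

    dS/dt = -b*S*I,  dI/dt = b*S*I - gamma*I,  dR/dt = gamma*I,
    where b = beta before shock_year and beta * shock_factor from then on.
    Returns the (S, I, R) state at the start of each year.
    """
    s, i, r = 1.0 - i0, i0, 0.0
    dt = 1.0 / steps_per_year
    history: List[State] = []
    for year in range(years):
        history.append((s, i, r))
        b = beta * shock_factor if year >= shock_year else beta
        for _ in range(steps_per_year):
            new_believers = b * s * i * dt   # susceptible -> infected
            dropouts = gamma * i * dt        # infected -> recovered
            s -= new_believers
            i += new_believers - dropouts
            r += dropouts
    return history


def peak_year(history: List[State]) -> int:
    """Year in which the infected ("believing") share is largest."""
    return max(range(len(history)), key=lambda year: history[year][1])


# Illustrative parameters only: beta / gamma = 5 before the shock,
# and 0.5 afterwards, so the idea can no longer sustain itself.
baseline = simulate_sir(0.5, 0.1, 0.001, 60, shock_year=10, shock_factor=1.0)
shocked = simulate_sir(0.5, 0.1, 0.001, 60, shock_year=10, shock_factor=0.1)
print(peak_year(baseline), peak_year(shocked))
```

With these numbers the shocked run peaks in year 10, the shock year, while the unshocked baseline keeps growing and peaks later. Simple Euler steps keep the sketch dependency-free; a proper ODE solver would be the natural choice for anything more serious.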
The fall of the Berlin Wall does not explain why Marxism declined when it did -- its growth had already been grinding to a halt over the previous decade, compared to its explosion during the 1960s and '70s. Still, it could be that there was a general anti-communist zeitgeist in the 1950s, so that academics would have cooled off to Marxism of their own accord, not because they were afraid of McCarthy or whoever else. Importantly, that's only one plausible link -- there are a billion others that don't pan out, so our plausible link may have arisen by chance: if you test 1000 correlations where no real effect exists, you'd expect about 5 of them to be significant at the 0.005 level anyway. This suggests that a "great man theory" of intellectual history is wrong. Surely someone needs to invent the theory, and it may be complex enough that if that person hadn't existed, the theory wouldn't have existed either (contra the view that somebody or other would've invented Marxism). After that, though, we could write a system of differential equations to model the dynamics of the classes of individuals involved -- perhaps just two, believers and non-believers -- and the interactions between individuals would be all that matter. How many persuasive tracts were there against postmodernism or Marxism, for example? And yet none of them convinced the believers, since the time wasn't right. Postmodernism was already growing at a slower rate by 1996, when the Sokal Affair put its silliness in the spotlight, and even then its growth didn't slow any faster as a result. Kind of depressing for iconoclasts -- but at least you can rest assured that at some point, the fuckers will get theirs.

Fourth, the sudden decline of all the big-shot theories you'd study in a literary theory or critical theory class is certainly behind the recent angst of arts and humanities grad students.
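The multiple-comparisons arithmetic can be checked with a quick simulation. Assuming every one of the 1000 correlations is pure noise, each p-value is uniformly distributed between 0 and 1, so the expected number below 0.005 is 1000 × 0.005 = 5; the actual count varies from batch to batch around that average. The function names, trial count, and seed below are arbitrary choices for illustration.

```python
import random


def false_positives(n_tests: int, alpha: float, rng: random.Random) -> int:
    """Count how many of n_tests null p-values (uniform on [0, 1)) fall below alpha."""
    return sum(1 for _ in range(n_tests) if rng.random() < alpha)


def average_false_positives(n_tests: int = 1000, alpha: float = 0.005,
                            trials: int = 1000, seed: int = 1) -> float:
    """Average false-positive count over many simulated batches of null tests."""
    rng = random.Random(seed)
    total = sum(false_positives(n_tests, alpha, rng) for _ in range(trials))
    return total / trials


print(1000 * 0.005)                 # expected count, n_tests * alpha
print(average_false_positives())    # close to 5; the exact value depends on the seed
```

Any single batch can easily turn up 2 or 9 "significant" links, which is why one plausible-looking correlation on its own carries little weight.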
Without a big theory, you can't pretend that you have specialized training that sets you apart from a layperson -- high school English teachers may be fine with that, but if you're in grad school, it's an admission that you failed as an academic. You want a good reputation. Isn't it strange, though, that no replacement theories have filled the void? That's because everyone now understands that the whole thing was a big joke and isn't going to be suckered again anytime soon. Now the generalizing and biological approaches to the humanities and social sciences are dominant -- but that's for another post. Also, as you sense that all of the big theories are dying, you must realize that you have no future: you'll be increasingly unable to publish articles or get others to cite you, and even if you became a professor, you wouldn't be able to recruit grad students into your pyramid scheme or enroll students in your classes, since their interest would be even lower than that of current students. Someone who knows more about intellectual history should compare today's arts and humanities grad students to the priestly caste that was becoming obsolete as Europe became more rational and secular. I'm sure they rationalized their angst as a spiritual or intellectual crisis, just as today's grad students might say they'd had an epiphany -- but in reality, they're just recognizing how bleak their economic prospects are and opting for greener pastures.

Fifth and last, I don't know about the rest of you, but I find young people today very refreshing. Let's look at 18-year-olds -- the impressionable college freshmen who could be infected by their dopey professors. If they begin freshman year just 1 year after a theory's peak, the idea is still very popular, so they'll get infected.
If we allow, say, 5 years of cooling off and decay, professors won't talk about it so much, or will use a less strident tone, so that only the students who were destined to latch on to some stupid theory will get infected. Taking the peak year, adding 5 years of decay, and subtracting 18, the first safe cohort was born as early as 1975 (for Marxism: 1988 + 5 - 18) or as late as 1989 (for social constructionism), depending on the trend. And obviously even among safe cohorts, some are safer than others -- people my age (27) may not go in for Marxism much, but have heard of it or taken it seriously at some point (even if only to argue against it intellectually). But 18-year-olds today weren't even born when Marxism started to die. It's easy to fossilize your picture of the world from your formative years of 15 to 24, but things change. If you turned off the radio in the mid-to-late '90s, you missed four years of great rock and rap music that came out from 2003 to 2006 (although now you can keep it off again). If you write off dating a 21-year-old grad student on the assumption that they're mostly angry feminist hags, you're missing out. And if you'd rather socialize with people your own age because younger people are too immature to have an intelligent discussion, ask yourself when you last talked with Gen-X or Baby Boomer peers without having to dance around all kinds of topics because of the moronic beliefs they've been infected with since their young adult years. Try talking to a college student about human evolution -- they're pretty open-minded. My almost-30 housemate, by comparison, was eager to hear that what I'm studying would show that there's no master race after all. What a loser.

* I started the graph of social constructionism at 1960, even though the data extend back to 1876, since the count was always very low before then (fewer than 5 per year, often 0). Including those points made the recent decline less apparent in the graph, so out they went.

Labels: culture, intellectual history