
Sunday, January 31, 2010

They've been signed for $10,000 per episode the next go around. Years ago Joel floated the idea of using Reality TV to test theories in social science. Paying the cast of Jersey Shore this much is going to mean that they'll be under serious pressure to produce high quality "product." I assume that means they'll crank up the magnitude of their "character." For example, Ronnie Magro will be under pressure to beat up more d-bags next season. "The Situation" is going to have to do the nasty with even nastier.

Friday, January 29, 2010

A friend pointed me to this YouTube clip of a young red-haired man objecting to the term "ginger," and the opprobrium he's been subjected to since the South Park episode "Ginger Kids" popularized ideas such as the possibility that redheads have no soul. I assume the kid is joking. On the other hand, I have read that red-haired males are at some disadvantage on online dating sites, just like non-white males. Have any readers of the red persuasion ever felt put upon due to their rare pigment status?

A review of a new book, What Darwin Got Wrong. Co-authored by Jerry Fodor, who has been continuing his war against natural selection. I've already read Darwinian Fairytales: Selfish Genes, Errors of Heredity, and Other Fables of Evolution (at the suggestion of a reader who found the arguments within incredibly persuasive, convincing me to simply ignore anything that reader ever asserted after finishing the book), so I think I have my quota of philosopher-declaring-evolution-the-naked-emperor under my belt. Meanwhile, there are real scholars grappling with the issues which emerged in the wake of the Neo-Darwinian Synthesis and its discontents, and pushing science forward.

Yes, Darwin was wrong about many things. But how many scientists will still have such an impact 150 years into the future? He's a big enough figure that people can sell books just by putting his name into the title! Only a few others fall into that class. Note: Here you can read a draft of the third chapter of What Darwin Got Wrong. Labels: Evolution

Thursday, January 28, 2010

Self-Control and Peer Groups:

However, according to a new study by Michelle vanDellen, a psychologist at the University of Georgia, self-control contains a large social component; the ability to resist temptation is contagious. The paper consists of five clever studies, each of which demonstrates the influence of our peer group on our self-control decisions. For instance, in one study 71 undergraduates watched a stranger exert self-control by choosing a carrot instead of a cookie, while others watched people eat the cookie instead of the carrot. That's all that happened: the volunteers had no other interaction with the eaters. Nevertheless, the performance of the subjects was significantly altered on a subsequent test of self-control. People who watched the carrot-eaters had more discipline than those who watched the cookie-eaters.

I assume time preference is heritable (at least via its correlation with other traits such as IQ), but that assumes you control background social and cultural variables.

Labels: culture

What is the single best reference for refuting the notion that "race is only a social construct" for a non-scientist? I don't know. (Suggestions welcome in the comments.) But Neven Sesardic (previously praised here) does a marvelous job in "Race: A Social Destruction of a Biological Concept," (pdf) Biology and Philosophy (2010, forthcoming).

Nothing new for the GNXP faithful, but the presentation is clear and compelling throughout. He opens with "Those who subscribe to the opinion that there are no human races are obviously ignorant of modern biology." --- Ernst Mayr, 2002. Great quote!

Wednesday, January 27, 2010

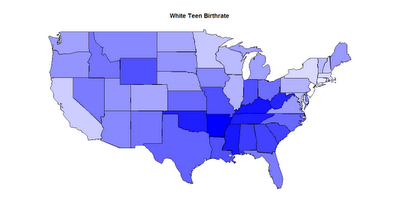

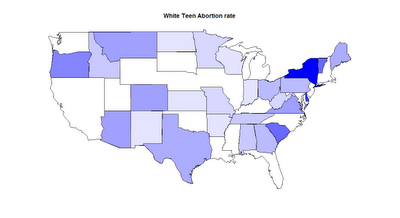

A supplement to the previous two posts. Below are maps which are shaded proportionally. Note how New York seems to be the abortion capital of the USA. Total surprise to me. Remember that these data are for white females from the ages of 15-19.

Labels: Teen Pregnancy

A reader pointed to this post in Free Exchange:

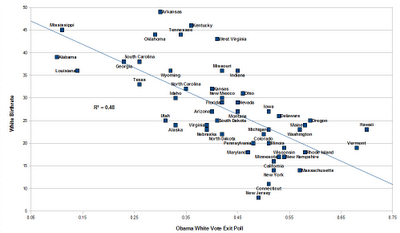

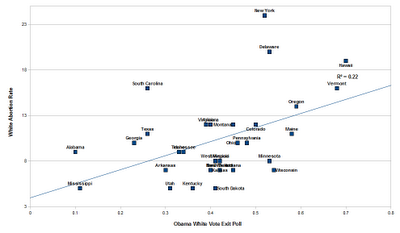

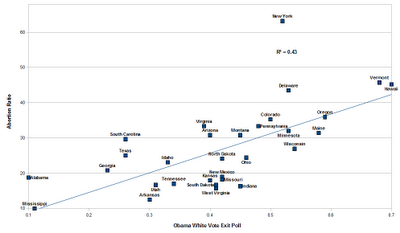

Here are the 15 states with the biggest percentage drop from 1988-2005 in the ratio of teen abortions -- the percentage of teen pregnancies that ended in abortion, not counting miscarriages. Crudely put, these are the states where pregnant white teens stopped having abortions between 1988-2005.

I have 2008 exit poll data handy by state, as well as the 2005 birth and abortion data. Abortion ratio = (Abortion rate)/(Abortion rate + Birthrate); basically pregnancy rate minus miscarriages. "Teen" here defines females in the age range 15-19. As you'd expect:

1) Whites voting Democrat is correlated with lower white birthrates
2) Whites voting Democrat is correlated with higher white abortion rates
3) Whites voting Democrat is correlated with higher white abortion ratios

#3 is stronger than #2, and I believe that's because teen pregnancy rates are lower in areas where whites are strongly Democrat, so the abortion rates are also going to be lower. The abortion ratio is somewhat normalized to pregnancy rate.

Labels: Teen Pregnancy
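The abortion ratio defined above can be computed directly from the two published rates. What follows is only a minimal sketch: the function name is hypothetical and the rates are invented for illustration, not taken from the report. It shows why the ratio is "somewhat normalized": a state can have a lower abortion rate and still have a higher abortion ratio if its birthrate is lower too.

```python
def abortion_ratio(abortion_rate: float, birth_rate: float) -> float:
    """Share of pregnancies (excluding miscarriages) that end in abortion.

    Both inputs are rates per 1,000 females aged 15-19.
    """
    total = abortion_rate + birth_rate
    if total == 0:
        raise ValueError("abortion rate and birthrate cannot both be zero")
    return abortion_rate / total


# Invented rates per 1,000 teens for two hypothetical states.
# State A has the LOWER abortion rate but the HIGHER abortion ratio,
# because its birthrate is much lower as well.
state_a = abortion_ratio(abortion_rate=12.0, birth_rate=12.0)
state_b = abortion_ratio(abortion_rate=15.0, birth_rate=45.0)

print(f"state A ratio: {state_a:.2f}")
print(f"state B ratio: {state_b:.2f}")
```

With these made-up inputs, state A comes out at 0.50 and state B at 0.25, even though state B's abortion rate is higher.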

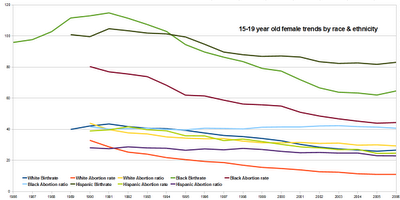

The New York Times has a new article, After Long Decline, Teenage Pregnancy Rate Rises. The graphic is OK, but it focuses on aggregate teen pregnancy rates (age group 15-19) instead of splitting it out so as to show births and abortions. The original report is chock full of tables, but not the charts I was looking for. So I decided to go ahead and create them. All the "teen" data is for the 15-19 age range. The trends are a bit different from those in the chart because I split up births and abortions, and also added in "abortion ratio," which simply illustrates the proportion of pregnancies which result in abortions, excluding miscarriages and stillbirths. The other rates are per 1,000 females of the given age range. First, the overall trends by time, broken out by race & ethnicity. White = Non-Hispanic white in all that follows.

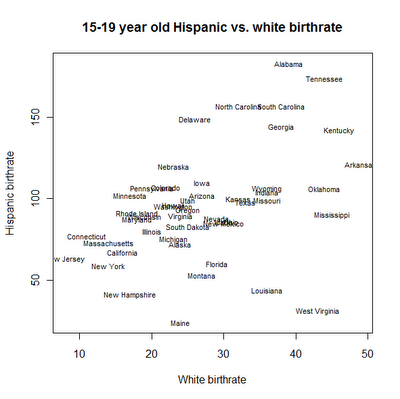

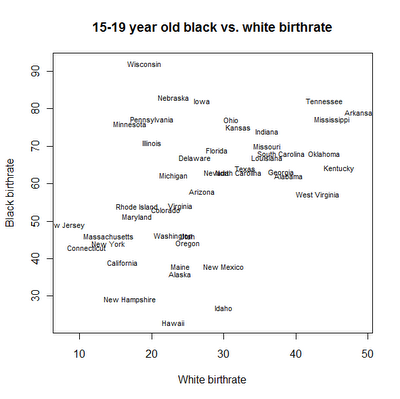

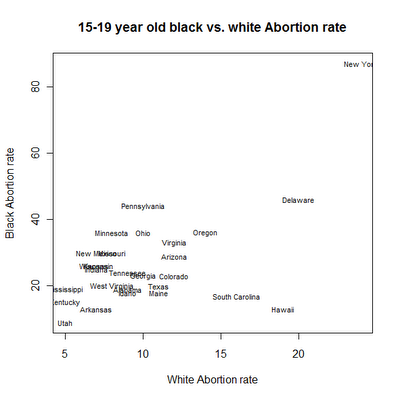

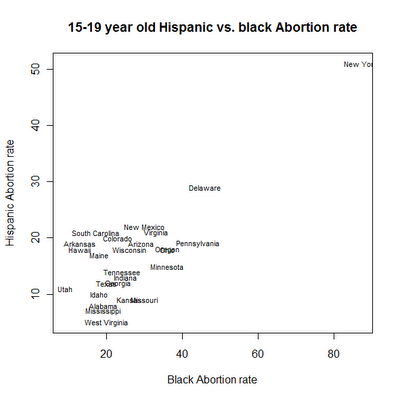

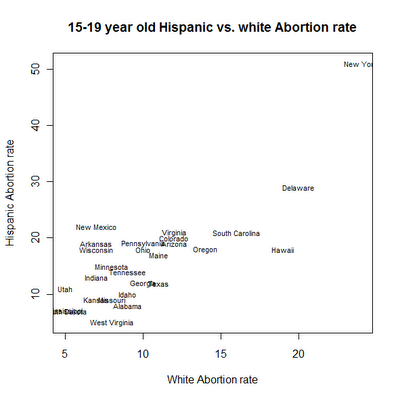

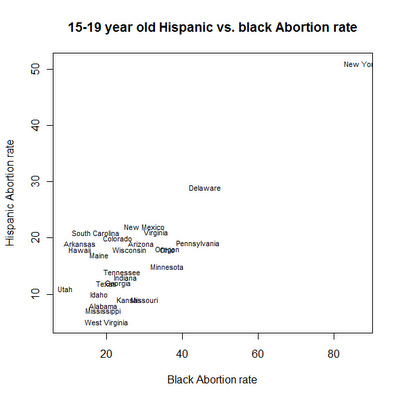

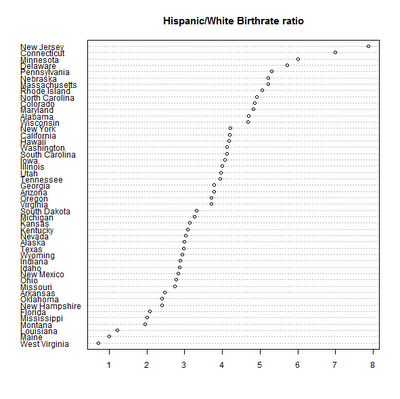

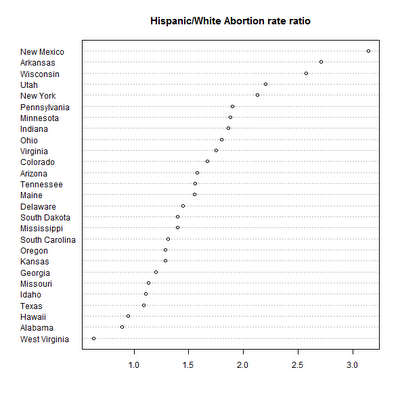

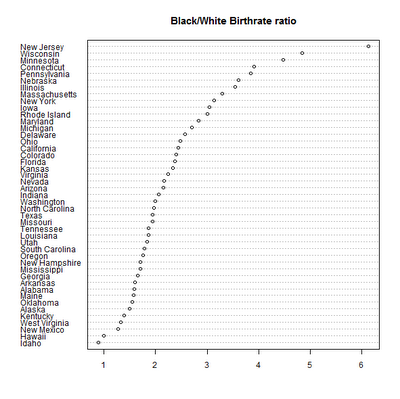

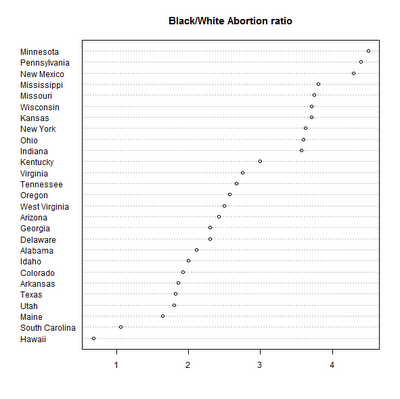

Since Latinos have high birthrates, it's no surprise that their abortion rate is higher than that of whites. On the other hand, the relatively low abortion ratio vis-a-vis white teens points to some cultural expectations among this group which we'd expect from Roman Catholics (though more generally Catholics don't differ much from Protestants in the United States in regards to abortion, so I think that this is less causal than correlated). There is also state level data, though it is spotty in regards to abortions. I decided to see if the different groups tracked each other in regards to rates. Here's what I found:

And the scatterplots, as well as some dot plots which show the ratio of the rates of two minority groups, as a function of geography.

Looking closely at the data it seems that local state law and/or culture matters a lot for teen abortion ratios. Vermont for example has a very high abortion ratio. Might look at it later.... Note: I excluded DC from the state level analysis because it's a bizarre outlier. White teen birthrates of 1 per 1,000, black & Hispanic above 100.

Labels: Teen Pregnancy

Tuesday, January 26, 2010

A bold prediction: "synthetic associations" are not a panacea

posted by p-ter @ 1/26/2010 08:48:00 PM

There's a bit of press surrounding the interesting result from David Goldstein's group that, in certain situations, a number of "rare" (defined as an allele frequency less than 5% [1]) variants influencing a trait can lead to an association signal at "common" SNPs. This phenomenon the authors call a "synthetic association".

The authors claim this is potentially the cause of many of the associations found in genome-wide association studies (with common SNPs), as well as a potential solution to the "missing heritability problem" (this isn't mentioned in the paper itself, but rather in a Times article describing it). In other words, this could be a panacea for all the ills of the human genetics community. Unfortunately, this seems rather unlikely.

1. There are a range of parameter values for which "synthetic associations" are plausible--where the effect of the rare variants is small enough to have avoided detection by linkage studies but big enough to show up via correlation with common variants. This range of parameters is kind of small--from Figure 2, it looks like maybe a set of mutations at a gene with a genotypic relative risk greater than 2 but less than 6. Will this be the case for some loci? Sure, that sounds plausible. Is it going to explain everything? No, of course not.

2. It has been pointed out (rightly) that diseases that are selected against should have their genetic component enriched for rare variants. Goldstein himself has made this argument about diseases like schizophrenia. So if schizophrenia has all these rare variants, and rare variants cause rampant "synthetic associations" at common SNPs, why hasn't anyone picked up whopping associations using common SNPs in schizophrenia?

3. The sickle cell anemia example, as presented in the paper, is extremely misleading. It seems the authors did a simple case control test for sickle cell in an African-American population. Recall that African-Americans are an admixed population, with each individual carrying large chunks of "European" and "African" chromosomes. Anyone with sickle cell will have at least one block of African chromosome surrounding the beta-globin locus, while those without will have two chromosomes sampled from the overall distribution of chromosomes in the population--15-20% of which, approximately, will be of European descent [2]. So any SNP with an allele frequency difference between African and European populations in this region will show up as a highly significant association with the disease due to the way they've done the test, and these associations will extend out to the length of admixture linkage disequilibrium--well, well beyond the LD found in African populations alone. The presentation of this example in the paper--the large block of association contrasting with the small blocks of LD in the Yoruban population--is a bit silly.

If I had to guess, and put a concrete bet on how this will play out, let's take the associations listed in their Table 1, which they call candidates for being due to synthetic associations. My bet: none of them are. Ok, maybe one.

[1] These sorts of thresholds are important to watch--in a year people will be calling things at 1% frequency "common" if it suits them for rhetorical purposes.

[2] Corrected from: "... will have two large blocks of "African" chromosomes surrounding the beta-globin locus, and everyone without will have at least one European chromosome in the same area"; see comments.

Labels: Genetics
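The admixture argument in point 3 can be put into a few lines of arithmetic. This is only a rough sketch under stated assumptions, not the paper's method: the function name and all the frequencies below are invented for illustration, controls are assumed to draw both chromosomes from the admixed pool, and cases are assumed to carry one chromosome that is guaranteed African at the locus and one drawn from the admixed pool. The marker SNP has no effect on disease at all; it differs between cases and controls purely because of ancestry.

```python
def expected_marker_freqs(p_african: float,
                          p_european: float,
                          european_fraction: float) -> tuple[float, float]:
    """Expected marker-allele frequency in cases and controls near the locus.

    Assumptions (illustrative only):
      * controls: both chromosomes sampled from the admixed population;
      * cases: one chromosome guaranteed African at this locus, the other
        sampled from the admixed population.
    """
    # Marker frequency on a randomly drawn chromosome from the admixed pool.
    admixed = (1 - european_fraction) * p_african + european_fraction * p_european
    controls = admixed
    cases = (p_african + admixed) / 2
    return cases, controls


# Invented marker frequencies; 20% European ancestry is within the
# 15-20% range mentioned in the text.
cases, controls = expected_marker_freqs(
    p_african=0.10, p_european=0.60, european_fraction=0.20
)
print(f"cases: {cases:.3f}  controls: {controls:.3f}  "
      f"difference: {cases - controls:+.3f}")
```

Under these assumptions the difference works out to `european_fraction * (p_african - p_european) / 2`, so any marker whose frequency differs between the two ancestral populations will differ between cases and controls, whether or not it has anything to do with the disease.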

I noticed that a new biopic of Confucius just opened in China. It's pretty obvious that they "sexed up" his life, as you can see in the trailer. In terms of a big-budget biopic it seems to me that the life of Confucius is a very thin source of blockbuster material in relation to other social-religious figures of eminence. Jesus, Moses and Buddha have supernatural aspects to their lives. Muhammad's life offers the opportunity for set-piece battles. Confucius was in many ways a failed bureaucrat, a genius unrecognized in his own day. His life can't be easily appreciated unless you have the proper context of his impact on Chinese history in mind. Stepping into it without a grand frame can lead one to conclude that he was quite a pedestrian man. Confucius was a man of ideas (though even those ideas can seem somewhat obscure, e.g. rectification of names). You see this in Annping Chin's The Authentic Confucius: A Life of Thought and Politics; I can't imagine Karen Armstrong writing such a dense and slow book.

Labels: Confucianism

Monday, January 25, 2010

'Jersey Shore' -- MTV Tries to Divide and Conquer:

Sources tell TMZ the network has told the cast if they don't accept MTV's deal by the end of business Monday, they will be replaced. And, MTV has told them it does not have to be a package deal -- the cast members who accept the offer will stay ... those who do not can have a nice life. Labels: Jersey Shore

Sunday, January 24, 2010

Frequency of lactose malabsorption among healthy southern and northern Indian populations by genetic analysis and lactose hydrogen breath and tolerance tests:

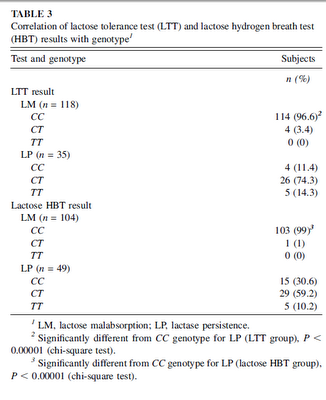

Volunteers from southern and northern India were comparable in age and sex. The LTT result was abnormal in 88.2% of southern Indians and in 66.2% of northern Indians...The lactose HBT result was abnormal in 78.9% of southern Indians and in 57.1% of northern Indians...The CC genotype was present in 86.8% and 67.5%...the CT genotype was present in 13.2% and 26.0%...and the TT genotype was present in 0% and 6.5%...of southern and northern Indians, respectively. The frequency of symptoms after the lactose load...and peak concentrations of breath hydrogen...both of which might indicate the degree of lactase deficiency, were higher in southern than in northern Indians.

The north Indian samples were from Lucknow on the mid-Gangetic plain, and the south Indian samples from Bangalore. The genetic variant conferring lactase persistence is the Central Asian one, T-13910. You can see the distribution of the genotypes by phenotype in the table above. These authors assume that the T allele was brought by the Indo-Aryans; this seems plausible given its clinal variation, as well as the fact that this variant seems to be common in European and Central Asian populations. Counting alleles from the genotype frequencies above (TT plus half of CT), the frequency of the T allele in the Lucknow sample was about 19.5%, and about 6.6% in the Bangalore sample. Here is a selection of frequencies for the T allele in other populations: 17% - Saami. You can see more here. This looks like a case of local adaptation.

Labels: India, Population genetics
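Allele frequencies follow from genotype frequencies by counting alleles: each TT individual carries two T alleles and each CT individual carries one, out of two alleles per person. Here is a minimal sketch using the genotype percentages quoted from the paper above (the function name is hypothetical):

```python
def t_allele_frequency(ct: float, tt: float) -> float:
    """Frequency of the T allele from genotype frequencies (as fractions).

    Each person has two alleles, so T = (2*TT + 1*CT) / 2 = TT + CT/2.
    """
    return tt + ct / 2


# Genotype frequencies quoted in the abstract.
north = t_allele_frequency(ct=0.260, tt=0.065)  # Lucknow
south = t_allele_frequency(ct=0.132, tt=0.000)  # Bangalore

print(f"Lucknow T allele frequency:   {north:.1%}")
print(f"Bangalore T allele frequency: {south:.1%}")
```

Note the division by two: the un-halved sum CT + 2×TT is the number of T alleles per 100 people, not the allele frequency.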

Thursday, January 21, 2010

What era are our intuitions about elites and business adapted to?

posted by agnostic @ 1/21/2010 01:36:00 AM

Well, just the way I asked it, our gut feelings about the economically powerful are obviously not a product of hunter-gatherer life, given that such societies have minimal hierarchy, and so minimal disparities in power, material wealth, privileges of all kinds, etc. Hunter-gatherers don't even tolerate would-be elite-strivers, so beyond a blanket condemnation of trying to be a big-shot, they don't have the subtler attitudes that agricultural and industrial people do -- these latter groups tolerate and somewhat respect elites but resent and envy them at the same time.

So that leaves two major eras -- agricultural and industrial societies. I'm going to refer to these instead by terms that North, Wallis, & Weingast use in their excellent book Violence and Social Orders. Their framework for categorizing societies is based on how violence is controlled. In the primitive social order -- hunter-gatherer life -- there are no organizations that prevent violence, so homicide rates are the highest of all societies. At the next step up, limited-access social orders -- or "natural states" that sprung up with agriculture -- substantially reduce the level of violence by giving the violence specialists (strongmen, mafia dons, etc.) an incentive to not go to war all the time. Each strongman and his circle of cronies has a tacit agreement with the other strongmen -- who all make up a dominant coalition -- that I'll leave you to exploit the peasants living on your land if you leave me to exploit the peasants on my land. This way, the strongman doesn't have to work very much to live a comfortable life -- just steal what he wants from the peasants on his land, and protect them should violence break out. Why won't one strongman just raid another to get his land, peasants, food, and women? Because if this type of civil war breaks out, everyone's land gets ravaged, everyone's peasants can't produce much food, and so every strongman will lose their easy source of free goodies (rents). The members of the dominant coalition also agree to limit access to their circle, to limit people's ability to form organizations, etc. If they let anybody join their group, or form a rival coalition, their slice of the pie would shrink. And this is a Malthusian economy, so the pie isn't going to get much bigger within their lifetimes. So by restricting (though not closing off) access to the dominant coalition, each member maintains a pretty enjoyable size of the rents that they extract from the peasants. 
Why wouldn't those outside the dominant coalition try to form their own rival group anyway? Because the strongmen of the area are already part of the dominant coalition -- only the relative wimps could try to stage a rebellion, and the strongmen would immediately and violently crush such an uprising. It's not that one faction of the coalition will never raid another, just that this will be rare and only when the target faction has lost some of its share in the balance of power -- maybe they had 5 strongmen but now only 1. Obviously the other factions aren't going to let that 1 strongman enjoy the rents that 5 were before, while they enjoy average rents -- they're going to raid him and take enough so that he's left with what seems his fair share. Aside from these rare instances, there will be a pretty stable peace. There may be opportunistic violence among peasants, like one drunk killing another in a tavern, but nothing like getting caught in a civil war. And they certainly won't be subject to the constant threat of being killed and their land burned in a pre-dawn raid by the neighboring tribe, as they would face in a stateless hunter-gatherer society. As a result, homicide rates are much lower in these natural states than in stateless societies. Above natural states are open-access orders, which characterize societies that have market economies and competitive politics. Here access to the elite is open to anyone who can prove themselves worthy -- it is not artificially restricted in order to preserve large rents for the incumbents. The pie can be made bigger with more people at the top, since you only get to the top in such societies by making and selling things that people want. Elite members compete against each other based on the quality and price of the goods and services they sell -- it's a mercantile elite -- rather than based on who is better at violence than the others. 
If the elites are flabby, upstarts can readily form their own organizations -- as opposed to not having the freedom to do so -- that, if better, will dethrone the incumbents. Since violence is no longer part of elite competition, homicide rates are the lowest of all types of societies. OK, now let's take a look at just two innate views that most people have about how the business world works or what economic elites are like, and see how these are adaptations to natural states rather than to the very new open-access orders (which have only existed in Western Europe since about 1850 or so). One is the conviction, common even among many businessmen, that market share matters more than making profits -- that being more popular trumps being more profitable. The other is most people's mistrust of companies that dominate their entire industry, like Microsoft in computers. First, the view that capturing more of the audience -- whether measured by the portion of all sales dollars that head your way or the portion of all consumers who come to you -- matters more than increasing revenues and decreasing costs -- boosting profits -- remains incredibly common. Thus we always hear about how a start-up must offer their stuff for free or nearly free in order to attract the largest crowd, and once they've got them locked in, make money off of them somehow -- by charging them later on, by selling the audience to advertisers, etc. This thinking was widespread during the dot-com bubble, and there was a neat management-oriented book written about it called The Myth of Market Share. Of course, that hasn't gone away since then, as everyone says that "providers of online content" can never charge their consumers. The business model must be to give away something cool for free, attract a huge group of followers, and sell this audience to advertisers. (I don't think most people believe that charging a subset for "premium content" is going to make them rich.) 
For example, here is Felix Salmon's reaction to the NYT's official statement that they're going to start charging for website access starting in 2011:

"Successful media companies go after audience first, and then watch revenues follow; failing ones alienate their audience in an attempt to maximize short-term revenues."

Wrong. YouTube is the most popular provider of free media, but they haven't made jackshit four years after their founding. Ditto Wikipedia. The Wall Street Journal and Financial Times websites charge, and they're incredibly profitable -- and popular too (the WSJ has the highest newspaper circulation in the US, ousting USA Today). There is no such thing as "go after audiences" -- they must do that in a way that's profitable, not just in a way that makes them popular. If you could "watch revenues follow" by merely going after an audience, everyone would be billionaires.

"The NYT here seems to be voluntarily giving up on all its readers outside the US, who can't be reasonably expected to have the ability or inclination to pay for web access. It had the opportunity to be a global newspaper, leveraging both the NYT and the IHT brands, and has now thrown that away for the sake of short-term revenues."

This sums up the pre-industrial mindset perfectly: who cares about getting paid more and spending less, when what truly matters is owning a brand that is popular, influential, and celebrated and sucked up to? In a natural state, that is the non-violent path to success because you can only become a member of the dominant coalition by knowing the right in-members. They will require you to have a certain amount of influence, prestige, power, etc., in order to let you move up in rank. 
It doesn't matter if you nearly bankrupt yourself in the process of navigating these personalized patron-client networks because once you become popular and influential enough, you stand a good chance of being allowed into the dominant coalition and then coasting on rents for the rest of your life. Clearly that doesn't work in an open-access, competitive market economy where interactions are impersonal rather than crony-like. If you are popular and influential while paying no attention to costs and revenues, guess what -- there are more profit-focused competitors who can form rival companies and bulldoze over you right away. Again look at how spectacularly the WSJ has kicked the NYT's ass, not just in crude terms of circulation and dollars but also in terms of the quality of their website. They broadcast twice-daily video news summaries and a host of other briefer videos, offer thriving online forums, and on and on. Again, in the open-access societies, those who achieve elite status do so by competing on the margins of quality and price of their products. You deliver high-quality stuff at a low price while keeping your costs down, and you scoop up a large share of the market and obtain prestige and influence -- not the other way around. In fairness, not many practicing businessmen fall into this pre-industrial mindset, because those who do won't be practicing for very long, just as businessmen who cried for a complete end to free trade would go under. It's mostly cultural commentators who preach the myth of market share, going with what their natural-state-adapted brain reflexively believes. Next, take the case of how much we fear companies that come to dominate their industry. People freak out because they think the giant, having wiped out the competitors, will enjoy a carte blanche to exploit them in all sorts of ways -- higher prices, lower output, shoddier quality, etc. 
We demand the protector of the people to step in and do something about it -- bust them up, tie them down, resurrect their dead competitors, just something! That attitude is thoroughly irrational in an open-access society. Typically, the way you get that big is that you provided customers with stuff that they wanted at a low price and high quality. If you tried to sell people junk that they didn't want at a high price and terrible quality, guess how much of the market you will end up commanding. That's correct: zero. And even if such a company grew complacent and inertia set in, there's nothing to worry about in an open-access society because anyone is free to form their own rival organization to drive the sluggish incumbent out. The video game industry provides a clear example. Atari dominated the home system market in the late '70s and early '80s but couldn't adapt to changing tastes -- and were completely destroyed by newcomer Nintendo. But even Nintendo couldn't adapt to the changing tastes of the mid-'90s and early 2000s -- and were summarily dethroned by newcomer Sony. Of course, inertia set in at Sony and they have recently been displaced by -- Nintendo! It doesn't even have to be a newcomer, just someone who knows what people want and how to get it to them at a low price. There was no government intervention necessary to bust up Atari in the mid-'80s or Nintendo in the mid-90s or Sony in the mid-2000s. The open and competitive market process took care of everything. But think back to life in a natural state. If one faction obtained complete control over the dominant coalition, the ever so important balance of power would be lost. You the peasant would still be just as exploited as before -- same amount of food taken -- but now you're getting nothing in return. At least before, you got protection just in case the strongmen from other factions dared to invade your own master's land. Now that master serves no protective purpose. 
Before, you could construe the relationship as at least somewhat fair -- he benefited you and you benefited him. Now you're entirely his slave; or equivalently, he is no longer a partial but a 100% parasite. You can understand why minds that are adapted to natural states would find market domination by a single or even small handful of firms ominous. It is not possible to vote with your dollars and instantly boot out the market-dominator, so some other Really Strong Group must act on your behalf to do so. Why, the government is just such a group! Normal people will demand that vanquished competitors be restored, not out of compassion for those who they feel were unfairly driven out -- the public shed no tears for Netscape during the Microsoft antitrust trial -- but in order to restore a balance of power. That notion -- the healthy effect for us normal people of there being a balance of power -- is only appropriate to natural states, where one faction checks another, not to open-access societies where one firm can typically only drive another out of business by serving us better. By the way, this shows that the public choice view of antitrust law is wrong. The facts are that antitrust law in practice goes after harmless and beneficial giants, hamstringing their ability to serve consumers. There is little to no evidence that such beatdowns have boosted output that had been falling, lowered prices that had been rising, or improved quality that had been eroding. Typically the lawsuits are brought by the loser businesses who lost fair and square, and obviously the antitrust bureaucrats enjoy full employment by regularly going after businesses. But we live in a society with competitive politics and free elections. If voters truly did not approve of antitrust practices that beat up on corporate giants, we wouldn't see it -- the offenders would be driven out of office. 
And why is it that only one group of special interests gets the full support of bureaucrats -- that is, the loser businesses have influence with the government, while the winner business gets no respect? How can a marginal special interest group overpower an industry giant? It must be that all this is allowed to go on because voters approve of and even demand that these things happen -- we don't want Microsoft to grow too big or they will enslave us! This is a special case of what Bryan Caplan writes about in The Myth of the Rational Voter: where special interests succeed in buying off the government, it is only in areas where the public truly supports the special interests. For example, the public is largely in favor of steel tariffs if the American steel industry is suffering -- hey, we gotta help our brothers out! They are also in favor of subsidies to agribusiness -- if we didn't subsidize them, they couldn't provide us with any food! And those subsidies are popular even in states where farming is minimal. So, such policies are not the result of special interests hijacking the government and ramrodding through policies that citizens don't really want. In reality, it is just the ignorant public getting what it asked for. It seems useful when we look at the systematic biases that people have regarding economics and politics to bear in mind what political and economic life was like in the natural state stage of our history. Modern economics does not tell us about that environment but instead about the open-access environment. (Actually, there's a decent trace of it in Adam Smith's Theory of Moral Sentiments, which mentions cabals and factions almost as much as Machiavelli -- and he meant real factions, ones that would war against each other, not the domesticated parties we have today.) 
We obviously are not adapted to hunter-gatherer existence in these domains -- we would cut down the status-seekers or cast them out right away, rather than tolerate them and even work for them. At the same time, we evidently haven't had enough generations to adapt to markets and governments that are both open and competitive. That is certain to pull our intuitions in certain directions, particularly toward a distrust of market-dominating firms and toward advising businesses to pursue popularity and influence more than profitability, although I'm sure I could list others if I thought about it longer. Labels: Economic History, Economics, Evolutionary Psychology, History, politics, Psychology

Athlete Atypicity on the Edge of Human Achievement: Performances Stagnate after the Last Peak, in 1988:

The growth law for the development of top athletes performances remains unknown in quantifiable sport events. Here we present a growth model for 41351 best performers from 70 track and field (T&F) and swimming events and detail their characteristics over the modern Olympic era. We show that 64% of T&F events no longer improved since 1993, while 47% of swimming events stagnated after 1990, prior to a second progression step starting in 2000. Since then, 100% of swimming events continued to progress. Labels: Sports

A comment below:

This thought has probably occurred to others as well, but isn't it interesting that if this theory of Baltics being the "true" Europeans is correct, that history repeated itself several thousand years later when the Baltic peoples became the last Europeans...to adopt Christianity, a Middle Eastern Religion? There must be something repelling about the region.

This is a good point. The Baltic sea is a bit closer on a straight line than Ireland and Scotland, but pre-modern transport and communication was much more dependent on water. The comment references the fact that Lithuania became a Christian nation only in the late 1300s. Some of this was a matter of geopolitics: the Baltic peoples were being subjected to what we'd probably term genocidal assaults from various German military orders whose notional rationale was to spread the Christian faith in a series of crusades. Christianity was therefore broadly construed as the religion of the enemy, with the Christian God being termed the "German god" in some cases. But another issue was that in the 14th century Lithuania expanded into the lands of the West and East Slavs, Roman Catholics and Eastern Orthodox Christians, respectively, and the religious neutrality of the Lithuanian elite allowed them to play off the two subject populations usefully. Once the Lithuanian elite chose Roman Catholicism, it began the centuries-long process of total assimilation of that elite into the Polish nobility and their alienation from their East Slavic Orthodox subjects and allies (the final completion of which occurred after the Union of Lublin). But even accounting for the historical and geopolitical contingencies, the relatively late conversion of the Baltic peoples to Roman Catholic Christianity is notable.
The Sami of northern Fenno-Scandinavia actually were only nominally Christianized during the medieval period, and maintained their shamanistic indigenous religion until the 18th century (along with a few beliefs and practices adopted from their Norse neighbors; there are woodblocks which seem to depict Sami venerating an idol of Thor). One Finnic group in Russia actually claims an unbroken line of non-Christian religious belief & practice down to the modern day. The lesson here is that it is in northeastern Europe that the homogenizing processes which swept across much of the rest of the continent were felt last, and to the least effect. Why? I assume it's ecology. Or as some would say, maybe it's agriculture (or the lack thereof). The climate of northeastern Europe is very unsuitable for crops which originate in the Middle East, so it would have taken some time for farmers to adapt them appropriately. The historical and genetic data imply a relatively recent push of Slavic-speaking farmers into northeastern European Russia. A model which posits that northeastern Europeans are particularly deep reservoirs of ancient European lineages would rely on the ecological parameters as the primary reason. The parallelism between the spread of cultures and genes here is notable, because the two often interact. Just as northeastern Europe may be the last redoubt of the hunter-gatherer relict populations within the continent, so it was also the region which was the last to join the medieval "Christian Commonwealth." In Guns, Germs and Steel Jared Diamond introduced the importance of geography in understanding historical patterns. This is quite clear when it comes to something like the history of the native populations of the New World; the critical role of environment in the lives of aboriginal peoples is something we're preconditioned to assume as an important background parameter. But the same factors are at work in Europe, in both prehistory and history. Labels: History

Tuesday, January 19, 2010

The title says it all, Should Obese, Smoking and Alcohol Consuming Women Receive Assisted Reproduction Treatment? The press release is based on a position statement from the European Society of Human Reproduction and Embryology. The link is here (not live yet).

Labels: Ethics

Over at my other blog I have a review up of a new paper in PLoS Biology. The authors argue that a particular Y haplogroup lineage, R1b1b2, which has often been assumed to be a marker of indigenous Paleolithic Europeans (i.e., those who were extant before the rise of agriculture and the spread of farmers), is actually a signature of Anatolians who brought agriculture. This probably isn't too surprising for the genetic genealogy nuts among the readers. After I got a copy of this paper I poked around the internet, and the general finding that R1b1b2 was very diverse in the eastern Mediterranean seems to have been well known among the genetic genealogy community (also see Anatole Klyosov's paper and what he says about Basques specifically). And then in eastern Europe you have R1a1, which seems to have also undergone recent range expansion. Finally, there are the recent rumblings out of ancient DNA extraction which imply a lot of turnover of mtDNA lineages during the shift from hunter-gathering to agriculture.

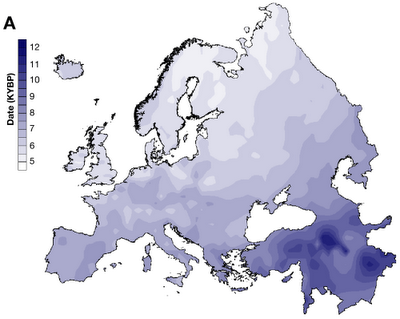

I think this makes us reconsider the idea that most of the ancestors of contemporary citizens of the European Union who were alive 10,000 years ago were actually resident within the current borders of the European Union. But let's put the details of that aside for a moment. Which group might be most representative of Paleolithic Europeans? If the paper above is correct, the Basques are not a good proxy for the ancient hunter-gatherers of Europe. Let's look at a map which illustrates the spread of agriculture. I'd always focused on the SE-NW cline, but if the U5 mtDNA haplogroup is a reasonable marker of ancient pre-agricultural Europeans, we need to look at the Finnic peoples of the northeast. This may explain why these populations also tend to be genetically distinct from other European groups; not because they're an exotic admixture, but because they're not. Anyway, simply speculation, I'm sure readers will have their opinions.... Labels: Archaeology, European genetics, Finn baiting

Monday, January 18, 2010

John Hawks has some commentary on a Nicholas Wade article which previews a new paper on long term effective population size in humans, soon to be out in PNAS (Wade's piece states that it'll be out tomorrow, but it's PNAS). Wade states:

They put the number at 18,500 people, but this refers only to breeding individuals, the "effective" population. The actual population would have been about three times as large, or 55,500.

Assuming an average census size on the order of 50,000, it seems as if our species stumbled onto a rather "risky" strategy of avoiding extinction. From what I recall conservation biologists start to worry about random stochastic events (e.g., a virulent disease) driving a species to extinction once its census size reaches 1,000. I suppose the fact that we were spread out over multiple continents would have mitigated the risk, but still.... It also brings me back to my post from yesterday: it seems that for most of human history we were a miserable species on the margins of extinction. For the past 10,000 years we were a miserable species. And now a substantial proportion of us are no longer miserable (it seems life is actually much improved from pre-modern Malthusianism outside of Africa and South Asia). If only Leibniz could have seen it! Labels: Population genetics
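For readers who want the arithmetic spelled out, here is a minimal sketch in Python. The effective size and the threefold multiplier come from Wade's piece as quoted above; the 1,000 threshold is only the figure I recalled from conservation biology, and the names (`census_size`, `WORRY_THRESHOLD`) are made up for illustration.

```python
# Figures quoted from Wade's article.
EFFECTIVE_SIZE = 18_500      # breeding individuals (the "effective" population)
CENSUS_MULTIPLIER = 3        # "about three times as large"

# Assumption: the census size at which extinction risk becomes a worry,
# as recalled in the post, not taken from the paper itself.
WORRY_THRESHOLD = 1_000


def census_size(effective_size, multiplier=CENSUS_MULTIPLIER):
    """Scale an effective population size up to an approximate census size."""
    return effective_size * multiplier


census = census_size(EFFECTIVE_SIZE)
margin = census / WORRY_THRESHOLD  # how many times above the worry threshold

print(f"census size: {census:,}")
print(f"multiple of threshold: {margin:.1f}")
```

So on these numbers the long-term census size sits a bit more than fifty times above the level where stochastic extinction becomes a serious concern, which is a thin margin for a species spread over several continents.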

Steve points me to an excerpt from E. O. Wilson's new ant novel in The New Yorker. In the late 1990s I read Empire of the Ants, which had a significant ant-centric aspect. A friend who later went on to do graduate work in entomology borrowed it from me, and she must have liked it because I never did get it back. I have a feeling that Wilson's Anthill isn't going to become a classic like Watership Down, but I'd be happy to be proven wrong....

Labels: Ants, E. O. Wilson

Sunday, January 17, 2010

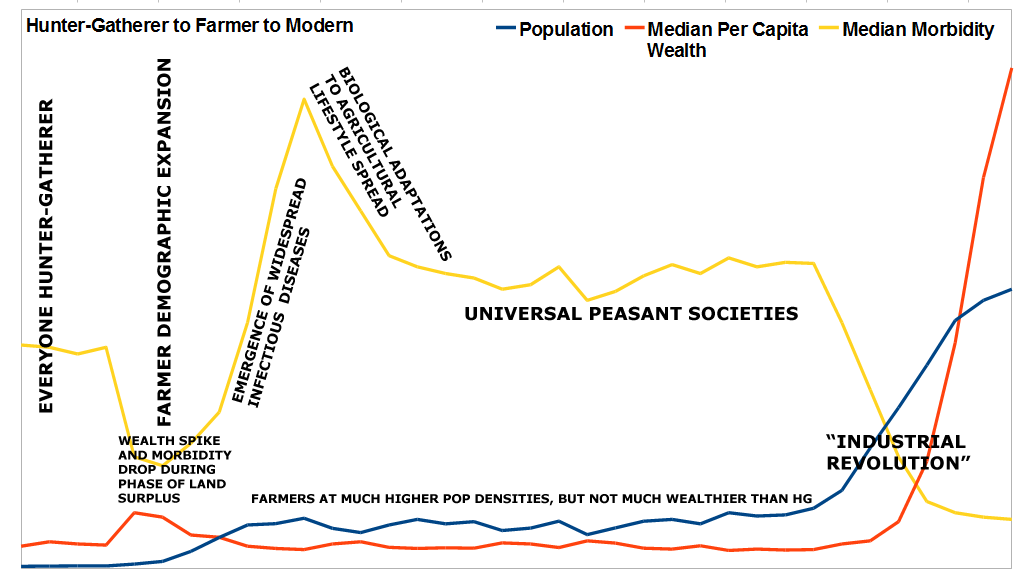

In the comments below I was outlining a simple model which really is easiest to communicate with a chart. I removed the labels on the Y and X axes because the details don't matter, the X axis is simply "time," and the Y axis simply reflects the magnitudes of the three trendlines. The key is to focus on the relationship between the three. I've labeled for clarity, but more verbal exposition below....

For most of human history we've been hunter-gatherers. But over the past 10,000 years there was a switch in lifestyle: farming emerged independently in several locations, and filled in all the territory in between. One truism of modern cultural anthropology is that this was a big mistake, that hunter-gatherer lifestyles were superior to those of peasant farmers, less miserable with much more free time. I think this is somewhat unsubtle, which is ironic since cultural anthropologists really love to deconstruct the errors of others which they themselves are guilty of (i.e., in this case, the normative aspect immediately jumps out in the scholarship; there's little doubt as to who they're "rooting" for). I am willing to grant that the median hunter-gatherer exhibits somewhat less morbidity than the median farmer. They're taller and have better teeth than farmers. Compared to modern people living in developed countries, though, the differences will seem trivial, so remember that we're talking on the margins here. So why did societies transition from hunter-gathering to farming? I doubt there's one simple answer, but there are some general facts which are obvious. There's plenty of evidence that farming supports many more people per unit of land, so in pure demographic terms hunter-gathering was bound to be doomed. They didn't have the weight of numbers. But why did the initial farmers transition from being hunter-gatherers to farmers in the first place? I think it was because farming was initially the rational individual choice, and led to more potential wealth and reproductive fitness. Remember, there's a big difference between existing in a state of land surplus and one of labor surplus. American farmers were among the healthiest and most fertile human populations which had ever lived before the modern era. Pioneers had huge families, and continued to push out to the frontier. This was not the lot of Russian serfs or Irish potato farmers. 
But eventually frontiers close, and Malthusian logic kicks in. The population eventually has nowhere to go, and the surplus of land disappears. At this point you reach a "stationary state," where a peasant society oscillates around its equilibrium population. I suspect that new farming populations which slammed up against the Malthusian limit suffered even more misery than their descendants. This is because I believe that their demographic explosion had outrun their biological and cultural capacity to respond to the consequences of the changes wrought upon their environment. First and foremost, disease. During the expansionary phase densities would have risen, and infectious diseases would have begun to take hold. But only during the stationary state would they become truly endemic as populations become less physiologically fit due to nutritional deficiencies. The initial generations of farmers who reached the stationary state would have been ravaged by epidemics, to which they'd only slowly develop immunological responses (slowly on a human historical scale, though fast on an evolutionary one). This is even evident in relatively recent historical periods; Italians developed biological and cultural adaptations to the emergence of malaria after the fall of Rome (in terms of culture, there was a shift toward settlement in higher locations). But there would be more to adapt to than disease. Diet would be a major issue. During the expansionary phase it seems plausible that farmers could supplement their cereal-based diet with wild game. But once they hit the stationary phase they would face the trade-off between quantity and quality in terms of their foodstuffs. Hunter-gathering is relatively inefficient, and can't extract as many calories out of an acre (at least an order of magnitude less), but the diet tends to be relatively balanced, rich in micronutrients, and often fats and protein as well. 
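The expansion-then-stationary-state logic above can be made concrete with a toy simulation. This is only a sketch under loud assumptions: I use plain discrete logistic growth as a stand-in for Malthusian dynamics, and the parameter values, the `simulate` function, and the "land surplus" proxy are all invented for illustration rather than drawn from any data.

```python
def simulate(n0=10.0, capacity=1000.0, growth=0.05, steps=400):
    """Discrete logistic growth toward a fixed carrying capacity.

    Returns a list of (population, land_surplus) pairs, where land_surplus
    is the share of the carrying capacity still unclaimed. It is a crude
    proxy for how well off the typical farmer is (assumption).
    """
    history = []
    n = n0
    for _ in range(steps):
        land_surplus = 1.0 - n / capacity
        history.append((n, land_surplus))
        # Growth slows as the frontier closes and the surplus shrinks.
        n += growth * n * land_surplus
    return history


history = simulate()
first_pop, first_surplus = history[0]
last_pop, last_surplus = history[-1]

print(f"start: population {first_pop:.0f}, land surplus {first_surplus:.2f}")
print(f"end:   population {last_pop:.0f}, land surplus {last_surplus:.4f}")
```

Early on almost all the land is unclaimed and the population grows at close to its maximum rate; by the end the population sits at the limit and the surplus has vanished. The toy model leaves out disease, diet, and any oscillation around the equilibrium, which is where the real misery comes in.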
The initial shock to the physiology would be great, but over time adaptations would emerge to buffer farmers somewhat from the ill effects of their deficiencies. This is one hypothesis for the emergence of light skin, as a way to synthesize vitamin D endogenously, as well as greater production of enzymes such as amylase and persistence of lactase, which break down nutrients which dominate the diet of agriculturalists. Once societies reached a stationary state it would take great shocks to push them to a position where becoming hunter-gatherers again might be an option. A population drop of 50%, not uncommon due to plague or political collapse, would still not be low enough so that the remaining individuals would be able to subsist upon game and non-cultivated plant material. Additionally the ecology would surely have been radically altered so that many of the large game animals which might have been the ideal sources of sustenance in the past would be locally extinct. The collapse of the Western Roman Empire and the highland Maya city-states seems to have resulted in lower population densities and reduced social complexity, but in both regions agriculture remained dominant. On the other hand, there is some evidence that the Mississippian societies might have experienced die offs on the order of 90% due to contact with Spanish explorers, and later ethnography by European settlers suggests much simpler tribal societies than what the Spaniards had encountered. Though these tribal groupings, such as the Creeks, still knew how to farm, it seems that judging from the conflicts which emerged due to European encroachment on hunting grounds that this population drop was great enough to allow for a greater reversion to the pre-agricultural lifestyle than was able to occur elsewhere. But then the pre-Columbian exchange and the exposure of native populations to the 10,000 years of Eurasian pathogen evolution was to some extent a sui generis event. 
The model I highlight above is very stylized, and I am aware that most societies go through multiple cycles of cultural, and possibly biological, adaptation. The putative massive die off of native populations of the New World may have resulted in a reversion to simpler hunter-gatherer cultural forms in many regions (North America, the Amazon), and also reduced morbidity as diets once more became diversified and indigenous infectious diseases abated due to increased physiological health and decreased population density. In China between 1400 and 1800 there was a massive expansion of population beyond the equilibrium established between the Han and Song dynasties. The reasons for this are manifold, but one was perhaps the introduction of New World crops. Clearly in Ireland the introduction of the potato initially resulted in greater health for the population of that island, as in the 18th century the Irish were taller than the English because of their enthusiastic adoption of the new crop. Over the long term, at least from the perspective of contemporary humans, agriculture was not a disaster. Dense populations of farmers eventually gave rise to social complexity and specialization, and so greater productivity. Up until the 19th century productivity gains were invariably absorbed by population growth or abolished by natural responses (e.g., in the latter case Sumerian irrigation techniques resulted in gains in productivity, but these were eventually diminished by salinization which entailed a shift from wheat to barley, and eventually an abandonment of many fields). Additionally, the rise of mass society almost immediately birthed kleptocratic rentier classes, as well as rigid social forms which constrained human individual choice and possibilities for self-actualization. 
The noble savage who lives in poverty but has freedom may look ludicrous to us today, but from the perspective of an 18th century peasant who lives in poverty but has no freedom it may seem a much more appealing model (though these sorts of ideas were in any case the purview of leisure classes).* But 10,000 years of crooks who innovate so that they could continue to steal more efficiently eventually gave rise to what we call modern capitalism, which broke out of the zero-sum mentality and banished Malthusian logic, at least temporarily (it is critical to note that population growth leveled off after the demographic transition, which allowed us to experience the gains in productivity as wealth and not more humans). I should qualify this though by noting that we can't know if the emergence of modern capitalism was inevitable in a historical sense. It happened once in Western Europe, and has spread through the rest of the world through emulation (Japan) or demographic expansion (the United States). The only possible "close call" was Song China, which had many of the institutional and technological preconditions, but never made the leap (whether that was because of the nature of the Chinese bureaucratic state or the disruption of the Song path toward capitalism by the Mongol conquest, we'll never know). By contrast, the spread of agriculture was likely inevitable.** Agriculture emerged at least twice, in the Old and New World, and likely multiple times in the Old World. Kings, armies and literacy, and many of the accoutrements of what we would term "civilization" arose both in the Old and New World after the last Ice Age. In all likelihood a confluence of biological, cultural and ecological conditions which were necessary for the rise of agricultural civilization were all in place ten thousand years ago. This also suggests that certain biological adaptations (e.g., lactase persistence) were inevitable as well. 
* Though from what I have read hunter-gatherers are strongly constrained by their own mores in a manner which rivals that of traditional peasant societies; only they have no priests who have written the customs down and serve as interpreters. Rather, it is the band (mob?) which arbitrates. ** We have data on independent shifts toward agricultural lifestyles. We don't have data on independent shifts toward modern capitalist economies. I suspect that the shift toward capitalism is probably inevitable over the long term because I don't think pre-modern agricultural civilizations would ever have been exploitative enough of the natural resource base that they would have been subject to worldwide collapse in any normal timescale. So there would always be potential civilizations from which modern post-Malthusian technological civilization could have emerged. Labels: Economic History

The waist-to-hip ratio research has been done to death, but an interesting twist, Blind men prefer a low waist-to-hip ratio:

Previous studies suggest that men in Western societies are attracted to low female waist-to-hip ratios (WHR). Several explanations of this preference rely on the importance of visual input for the development of the preference, including explanations stressing the role of visual media. We report evidence showing that congenitally blind men, without previous visual experience, exhibit a preference for low female WHRs when assessing female body shapes through touch, as do their sighted counterparts. This finding shows that a preference for low WHR can develop in the complete absence of visual input and, hence, that such input is not necessary for the preference to develop. However, the strength of the preference was greater for the sighted than the blind men, suggesting that visual input might play a role in reinforcing the preference. These results have implications for debates concerning the evolutionary and developmental origins of human mate preferences, in particular, regarding the role of visual media in shaping such preferences. Full description of the research here. Labels: Evolutionary Psychology

Saturday, January 16, 2010

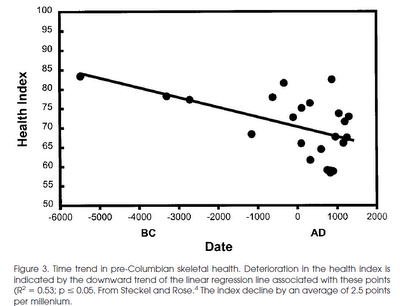

I've been interested in the transition toward agriculture, and its relationship to human health, for a while. There seem to have been two dominant paradigms in anthropology over the past century. The first is that agriculture spread because it was superior. Farmers were not as poor or ill-fed as hunter-gatherers. More recently, there has been a strong shift toward the view that on the whole the shift toward agriculture actually was associated with an increase in morbidity, and that hunter-gatherers lived lives of relative leisure. Though I lean toward the second view more than the first, it seems likely to me that the anthropological consensus, or at least the consensus communicated to the public, has shifted too far in the direction of the leisurely hunter-gatherer. No matter the exact case, I think it is important to look more at individual cases and the raw data, instead of resting on theoretical presuppositions. After all, it is possibly the case that in the pre-modern period the wealthiest populations of all would have been agriculturalists who existed in the short transient between the introduction of agriculture and the catch-up of the population to the Malthusian limit. It therefore makes absolute sense from a rational-actor model why hunter-gatherers might rapidly defect to the agricultural lifestyle in a world where most arable land was unclaimed.

Poking around I found an interesting paper, Skeletal health in the Western Hemisphere from 4000 B.C. to the present (ungated version), which looks at health among native populations before the arrival of Europeans. The New World is probably a good target for case studies because even in 1492 it seems that across much of North and South America there was still a great deal of inter-penetration between agricultural and non-agricultural lifestyles, with some populations practicing both in a facultative manner. Here are the two most interesting figures:

There's a lot of variance, but the trendline seems clear. Additionally, the authors note that after 1492 the native skeletons are often more robust and indicate more health than before, despite the introduction of Eurasian pathogens. I think the reasons for this are two-fold. First, short-term mortality can result in a medium-term decline in morbidity, as the population drops far below the Malthusian limit. Second, the authors note that plains nomads are overrepresented in the post-1492 samples. These populations with horses had radically transformed their lifestyles, and were arguably much more affluent after the transference of the European toolkit, as they rode along the transient between two Malthusian limits. Labels: Agriculture, anthropology

Daniel Larison has a post up where he criticizes a David Brooks column. Here's what Larison observes (Brooks' quote within):

David Brooks is right that culture and habits matter, but this one line rang false: There is the influence of the voodoo religion, which spreads the message that life is capricious and planning futile.

There is of course a strong tendency to look at the religion a society adheres to and compare that to the society itself. Then one simply maps a set of traits from the religion to the society to establish a causal relationship. Most of the relationships abduced in this manner are very plausible at first blush. But their track record is weak. For example, there is usually a tacit assumption that "higher religion," formally institutionalized, and often codified in a text, is associated with, and fosters, "higher civilization." Presumably according to Max Weber et al. a religion with the intellectual subtlety of Calvinism is far more likely to give rise to a robust capitalist order than a more primal faith which is essentially animism. I think this is an eminently plausible relation to assert. But consider the one society in the world today which places a primal animist tradition at the center of its national life: Japan.* How primitive a culture is that? The moral for me is that the plausibility of a relation is often highly conditioned on focusing on a narrow set of facts. But if you expand the sample space and look at counter-examples,** or attempt to generate inferences and see how the empirical data fit those inferences, it all becomes very murky. Note: The type of people who convert to Christianity in South Korea and Buddhism in the United States are in many ways similar; forward elements which are more urbane and educated than the population at large. By contrast, in South Korea Buddhism is a reservoir of a more traditionalist and conservative sentiment, as is Christianity in the United States. These facts likely have little to do with the nature of these religions, and more to do with contingent historical circumstances. 
* In West African societies Vodun is a common system of belief, as Voodoo is in Haiti. But the difference between Shinto and Vodun/Voodoo is that the Japanese elite accepts Shinto as a robust part of the national identity. By contrast West African nations have generally attempted to suppress Vodun. If one presumed that acceptance of animism goes along with being primitive, then one predicts that Japan is more primitive than Haiti or West Africa, where the elites reject their indigenous animistic tradition. ** Most people are very ignorant of cross-cultural data, especially when it comes to religion. Labels: Religion

Friday, January 15, 2010

I recently read The Fires of Vesuvius: Pompeii Lost and Found & Roman Passions: A History of Pleasure in Imperial Rome. Got me in the mind of thinking more about the history of city life, and what it was like in the past, and how it compares to my own experiences. In Blessed Among Nations: How the World Made America Eric Rauchway asserts that the American mania for public health in cities was driven in large part by fears of contagion brought by new immigrants, and that the United States by 1900 was the first society where urban life expectancies began to surpass rural ones. The reality of attrition may be one reason why ancient cities such as Rome could shrink by an order of magnitude in population over a century (circa 500 to 600) when rents contingent upon a particular political order disappeared.* Consider the famous Roman baths. These were essential Third Places, where individuals of all classes and both sexes might mingle.** But remember that the baths were not chlorinated, and the flushing of dirty water was primitive enough that classical literature makes several references to floating feces. In regards to elimination it seems that in most residences the latrine was located near the kitchen, in part so that leavings from the dinner preparation (at least in more affluent houses; the lower classes may mostly have eaten the equivalent of "street food") could be discarded easily. And there was a notable shortage of latrines in urban apartment buildings on a per capita basis, with one to a complex being standard.

This got me thinking about my visit to Bangladesh as a child in the late 1980s. The lack of appropriate sewage disposal facilities in Dhaka was very salient to me at the time, and only somewhat less so when I visited in 2004. In 1989 I arrived for Christmas break, so the weather was relatively mild and dry. Nevertheless the filth in the city of Dhaka was such that I was constantly plagued by respiratory problems. There was though an interlude of a week in a rural area (where a part of my family is from). It was relatively primitive; electrification had not swept the region yet. Nevertheless the cleanliness of the countryside in relation to the city was very striking, and my respiratory ailments quickly cleared up. This is not to say that I did not notice the smell of cow dung (which I preferred to the constant aroma of human ordure in Dhaka), and we did boil our water as a precautionary measure. And aside from one house built by my grandfather there were no modern latrines in the village (you've seen Slumdog Millionaire?). Nevertheless the effect on my health was palpable in the rural vs. urban contrast. This may be reminiscent of the zero-sum nature of pre-modern life. On the one hand ancient cities grew large because of the vicissitudes of subsistence cultivation. Rome's lower class population originated in large part from dispossessed yeoman farmers. Escape to the city might have been a way to avoid starvation as a freeholder whom the gods had not smiled upon. And for the elites Rome was a magnet which drew them because of its centrality as the locus of public life, status and prestige. For intellectuals and the aspiring urbane the city was the only game in town. But it is no surprise that the well-to-do focused so much on their gardens and urban parks, as they were private retreats from the repulsive squalor of ancient city life which wealth could not fully insulate one from. Today young couples, at least in the United States, often move out of the city. 
The main reason is cost of living, and the option of having an affordable home with more space for children, and better schools. It is a testament to modern civilization that health is a relatively minor concern when making this sort of decision (in fact, New Yorkers have a high life expectancy, though that may not be due to urban life per se). There are many resemblances between the ancient and modern city in terms of the roles they play within a society, but at least we have moved past some of the more harsh trade-offs. Note: In my trip in 2004 it seemed to me that Dhaka had developed a bit in the interlude in terms of air quality and sewage disposal, though I still had respiratory issues, and it was still filthy by Western standards. But the roads were better, and we visited several houses of acquaintances who lived in the rural areas just outside the city, though they worked in the city. Again the difference in air quality was very notable, and I could understand why they would subject themselves to the commute so as to spare their families. Additionally, I might add that I also perceived a wide variance in air quality within Dhaka itself, with newer areas of the city built up due to urban sprawl being less foul than the older core. * One possibility would be relocation to other cities, or the transformation of long term city-dwellers into subsistence farmers who settled outside the city limits. I am skeptical of the latter option in a world at its Malthusian limit, and where subsistence farming seems likely to have had little margin whereby one could learn the skills necessary as an adult. ** By the Imperial period most baths were sex segregated spatially or temporally, but not all.

Thursday, January 14, 2010

There's a lot of media buzz right now about a new report in JAMA on the empirical trends on prevalence of obesity in the United States. You can read the whole paper here (too many tables, not enough graphs). Interestingly, like George W. Bush it seems that Harry Reid is prejudiced against the overweight. The data in the paper above strongly implies that anti-fat bigotry is going to have disparate impact.

Labels: Obesity

Wednesday, January 13, 2010

Here's the link to the new paper in Nature on the evolution of the human & chimp Y chromosome, Chimpanzee and human Y chromosomes are remarkably divergent in structure and gene content. ScienceDaily and The New York Times have summaries up. Wonder if there'll be future editions of Adam's Curse....

Labels: Genetics

Tuesday, January 12, 2010

Frequent Cognitive Activity Compensates for Education Differences in Episodic Memory:

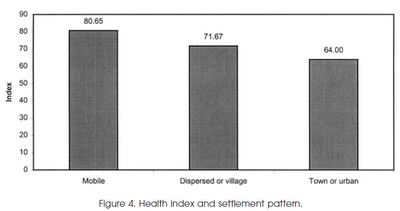

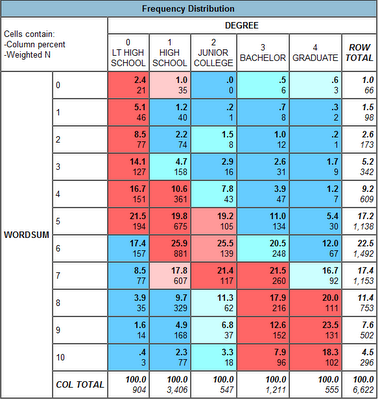

Results: The two cognitive measures were regressed on education, cognitive activity frequency, and their interaction, while controlling for the covariates. Education and cognitive activity were significantly correlated with both cognitive abilities. The interaction of education and cognitive activity was significant for episodic memory but not for executive functioning.

Here's some survey data on books from a few years ago, One in Four Read No Books Last Year:

One in four adults read no books at all in the past year, according to an Associated Press-Ipsos poll released Tuesday. Of those who did read, women and older people were most avid, and religious works and popular fiction were the top choices.

Here's the GSS when it comes to comparing educational attainment & WORDSUM, limiting the sample to 1998 and after:

I assume readers of this weblog are more familiar with the dull who have educational qualifications, but it is important to note that there is a non-trivial number of brights who for whatever reason didn't go on to obtain higher education (though this is more true of older age cohorts in the United States).

Labels: Psychology

Sunday, January 10, 2010

This week David Brooks has a column up on the messianic variant of the "Mighty Whitey" motif. Steve points out that this is a relatively old genre, with roots going back to the Victorian period. It also has a basis in fact. Consider the White Rajahs of Sarawak. But Mighty Whitey highlights something more general, and much older.

In some cases Mighty Whitey is not particularly mysterious. The Europeans had modest organizational and technological advantages over the indigenes of the New World, but their biggest advantage was biological. This biological advantage easily became an ideological one; in the pre-modern world disease had a strongly supernatural cast and served as an indicator of the gods' favor. The emergence of the White Rajahs of Sarawak relies on more straightforward social and historical processes; the period around 1900 was arguably the one where there was maximal technological and organizational disjunction between Europe and the rest of the world. Non-European populations theoretically had access to European technology and organizational techniques (e.g., the Japanese), but it is also likely that a European was particularly well-placed to leverage the wider network of information and materiel which had spread across the world during the high tide of the imperial & colonial moment.

The reason for an individual "going native" can be conceived of in a relative-status sense; being a king among the barbarians sounds much more fun than being an obscure civilized burgher. In the process these individuals serve as the vectors for cultural diffusion of ideas. One can also model this as a tension between within-group vs. between-group dynamics; traitors can often secure for themselves great wealth and status, at the expense of the group from which they defected. From their own perspective a traitor is just engaging in self-interested cultural arbitrage.

But as I said above the motif is an old one, and its factual basis is ancient and likely general. Consider Samo:

Samo (died 658) was a Frankish merchant from the "Senonian country" (Senonago), probably modern Sens, France. He was the first ruler of the Slavs (623-658) whose name is known, and established one of the earliest Slav states, a supra-tribal union usually called (King) Samo's empire, realm, kingdom, or tribal union.
If the accounts are correct, the historical Samo traded a life as a merchant in Post-Roman Francia for that of the paramount chief of a federation of pagan Slavs in a fully re-barbarized margin of the post-Roman world. Though a product of Christian post-Roman Western European civilization, Samo ended his life as a pagan warlord. He is notable because of the critical role he reputedly played in the ethnogenesis of the Wends, a group of Slavs who were forcibly Christianized and Germanized in the 13th century, five to six hundred years after Samo's time.

Over the long arc of history Europeans became a Christian meta-ethnicity, influenced by the long shadow of Romanitas. After the fall of Rome this often occurred through a mix of processes, but generally it involved the conversion of the elite or the king, and then a slow gradual shift among the populace. What became Christendom was the end product of the process. But this was not the only process.
On a specific individual level there were likely many Samos, who profited by tacking against the winds of history in their own lives (though the post-Roman nations which arose in Gaul, Iberia and Italy generally assimilated the German barbarians who settled amongst them, in the first few generations there were many instances of local Roman nobility "going barbarian" for personal advantage, with several cultural traits such as trousers persisting as the common heritage of both Germans and Romans).

Samo's life may also illustrate the power of marginal advantages in the pre-modern world. It is not as if he brought significant technological advantages to the Slavs. Additionally, this was a world where median differences in wealth between the richest and poorest societies were on the order of 25%. Everyone was poor. And as a merchant he was likely somewhat worldly, but he likely lacked connections to the power elites of the post-Roman world. But he was a Christian, at least by origin, and was fluent in post-Roman civilization. His cultural background included within its memory the concept of powerful autocrats tying together disparate peoples. Concepts which might have seemed obvious to anyone who matured within post-Roman civilization might have been alien and novel to those who were purely barbarian, as the Slavs were (i.e., the Slavs were not barbarized after the fall of Rome, they were the barbarians who had never known Roman rule or influence). To the Slavic elite Samo may have been both worldly and organizationally confident. Or, more prosaically, he might have been perceived as a compromise candidate who did not put at risk the relative status and position of any of the contemporary elite lineages. After all, it is recorded that Samo's sons did not inherit his power.

If you conceive of cultures as phenomena of interest which are subject to dynamics, it is important to fix upon the individual elements which allow for them to evolve over time.
The role of indigenous cultural vanguards, what might be colloquially termed "sell outs," is well known. But the role of outsiders who "go native," and so transform the natives profoundly, has been less well emphasized and clarified, in part because the outsiders become a seamless part of the natives' heritage, and also because the outsiders may only delay the inevitable. But in the end, only death is inevitable.

Labels: History

Saturday, January 09, 2010

Localizing recent positive selection in humans using multiple statistics

posted by

p-ter @ 1/09/2010 07:41:00 AM

Online this week in Science, a group presents a method for identifying genes under positive selection in humans, and gives some examples. I have somewhat mixed feelings about this paper, for reasons I'll get to, but here's their basic idea:

Readers of this site will likely be familiar with genome-wide scans for loci under positive selection in humans (see, e.g., the links in this post). In such a scan, one decides on a statistic that measures some aspect of the data that should be different between selected loci and neutral loci--for example, extreme allele frequency differences between populations, or long haplotypes at high frequency--and calculates this statistic across the genome. One then decides on some threshold for deciding a locus is "interesting", and looks at those loci for patterns--are there genes involved in particular phenotypes among those loci? Or protein-coding changes?

In this paper, the authors note that many of these statistics are measuring different aspects of the data, such that combining them should increase power to distinguish "interesting" loci from non-"interesting" loci. That is, if there's an allele at 90% frequency in Europeans and 5% frequency in Asians, that's interesting, but if that allele is surrounded by extensive haplotype structure in one of those populations, that's even more interesting.

The way they combine statistics is pretty straightforward--they essentially just multiply together empirical p-values from different tests as if they were independent. I wouldn't believe the precise probabilities that come out of this procedure (for one, the statistics aren't really fully independent), but it seems to work--in both simulations of new mutations that arise and are immediately under selection and in examples of selection signals where the causal variant is known (Figures 1-3)--for ranking SNPs in order of probability of being the causal SNP underlying a selection signal.

With this, the authors have a systematic approach for localizing polymorphisms that have experienced recent selection. It's necessarily somewhat heuristic, sure, but it does the job. They then want to apply this procedure to gain novel insight into recent human evolution.
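To make the "multiply the empirical p-values" idea concrete, here is a minimal sketch in Python. It is not the authors' implementation: the function names, the rank-based definition of an empirical p-value, and the toy numbers are all assumptions made purely for illustration, and (as noted above) the product is only useful for ranking SNPs, not as a calibrated probability.

```python
def empirical_p_values(scores):
    """Empirical p-value of each score: the fraction of all scores
    that are at least as large (larger = more extreme, by assumption)."""
    n = len(scores)
    return [sum(1 for other in scores if other >= s) / n for s in scores]


def composite_ranking(stat_table):
    """Combine several per-SNP statistics by multiplying their empirical
    p-values as if the tests were independent (a heuristic, not a true
    probability).

    stat_table maps a statistic name to a list of per-SNP scores; every
    list must cover the same SNPs in the same order.
    Returns (composite scores, SNP indices ordered most to least extreme).
    """
    per_stat = [empirical_p_values(scores) for scores in stat_table.values()]
    n_snps = len(per_stat[0])
    composite = []
    for i in range(n_snps):
        product = 1.0
        for p_values in per_stat:
            product *= p_values[i]
        composite.append(product)
    # Smaller product = more extreme on more statistics at once.
    ranking = sorted(range(n_snps), key=lambda i: composite[i])
    return composite, ranking


# Hypothetical toy data: four SNPs scored on two made-up statistics.
toy = {
    "freq_diff": [0.10, 0.85, 0.30, 0.20],
    "haplotype_length": [0.5, 3.1, 2.9, 0.4],
}
composite, ranking = composite_ranking(toy)
print(ranking[0], composite[ranking[0]])
```

In the toy data the second SNP is the most extreme on both statistics, so it comes out on top of the ranking; a SNP that is extreme on only one statistic gets a middling product.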
This is sort of the crux of the matter--does this new method actually give us new biological insight? The novel biology presented consists of a few examples of selection signals where they now think they've identified a plausible mechanism for the selection--a protein-coding change in PCDH15, and regulatory changes near PAWR and USF1 (their Figure 4). On reflection, however, these examples aren't new.

Consider PCDH15--this gene was mentioned in a previous paper by the same group, where they called a protein-coding change in the gene one of the 22 strongest candidates for selection in humans (Table 1 here, and main text). It's unclear what is gained with the new method (except perhaps to confirm their previous result?).

Or consider the regulatory changes near PAWR and USF1. The authors use available gene expression data to show that SNPs near these genes influence gene expression, and that the signals for selection and the signals for association with gene expression overlap. Early last year, a paper examined in detail the overlap between signals of this sort, and indeed, both of these genes are mentioned as examples where this overlap is observed. So using different methods, a different group published the same conclusion about these genes a year ago. Again, it's unclear what one gains with this new method.

In general, then, this paper has interesting ideas, but puzzlingly fails to really take advantage of them [1]. That said, they've taken some preliminary steps down a path that is very likely to yield interesting results in the future.

-----

[1] I wonder if I'm being too harsh on this paper just because it was published in a "big-name" journal. If this were published in Genetics, for example, I certainly wouldn't be opining about whether or not it contains any novel biology.

-----

Citation: Grossman et al. (2010) A Composite of Multiple Signals Distinguishes Causal Variants in Regions of Positive Selection. Science. DOI: 10.1126/science.1183863

Labels: Population genetics

Thursday, January 07, 2010

Lots of talk in the media about how the "underwear bomber" was from a wealthy and cosmopolitan background. Like the poverty = crime meme, the poverty & backwardness = terrorism meme is still floating around, though the past decade's evidence of the prominence of affluent and well-educated individuals in international terrorist networks is eroding that expectation's dominance a great deal. One thing I noted, though, was that many Nigerians are claiming that Umar Farouk Abdulmutallab was radicalized in Londonistan. Though Nigeria has had a great deal of Muslim-Christian violence over the past few decades, the main reasons can be easily attributed to local dynamics (internal migration, Hausa chauvinism, etc.). I think there is a difference between a movement such as ETA, which has clear and distinct aims, and more quixotic groups which were basically nihilistic in their outcomes (if not aims), such as the Red Army Faction. The Salafist international terrorism movement resembles the latter more than the former in terms of the practical outcomes of their actions.

The importance of diaspora communities and mobile cosmopolitans in international terrorism makes me wonder as to the relevance of Peter Turchin's thesis that civilizational boundaries are critical in shaping between-group dynamics. Among "right thinking people" the Clash of Civilizations narrative is dismissed, but there are many non-right thinking people for whom it is alive and essential, even if they wouldn't put that particular name to it (i.e., usually it is for them one of civilization vs. barbarism, Christianity vs. heathenism, the Abode of Islam vs. the Abode of War). And the civilizational chasm may be most alive precisely for those people who live on the boundaries between the two. The popularity of nationalist political movements in areas of Europe with large immigrant populations attests to the generality of this insight.

Update: Just noticed that Haroon Moghul makes similar observations:

The first point: radicalism is most likely to emerge from zones of overlap. By this I mean the people, places or other contexts where Western and Islamic perspectives come together in negative contrast. Say, the African Muslim student who travels to Europe to study, finding himself alienated by the lifestyle around him, the hateful comments about Islam in the public discourse and the undeniable pain of war and poverty in so many Muslim lands. Or the British Muslim who's angry at his government's foreign policy and tired of not being considered part of his country. (No wonder the pining for future Caliphates: it's somewhere one's passport might imply belonging.)

(via JohnPI)

Addendum: Just a note, the intersection between cultures/civilizations can play out synthetically or through confrontation. The two Jewish rebellions against Roman rule were ultimately futile, and forced a reconstruction of Jewish identity into a more pacific form. On the other hand, one could model Christianity as a synthesis of Jewish and Greco-Roman culture which was eminently successful in its own right.

Labels: culture

Wednesday, January 06, 2010

Last month I pointed to a paper on Chinese population structure, Genomic Dissection of Population Substructure of Han Chinese and Its Implication in Association Studies. One thing to note was that the average FST differentiating Han populations was on the order of 0.002, while that differentiating Europeans was on the order of 0.009. Below are the various Han populations, along with Japanese. CHB = Beijing, while CHD = Denver. The Denver sample is probably biased toward Cantonese and Fujianese, since most American Chinese are from these two groups. As a point of reference, here are South Asian genetic distances.
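For readers who want a feel for what an FST of 0.002 versus 0.009 means, here is a minimal sketch of the textbook heterozygosity-based definition, FST = (H_T - H_S) / H_T, for a single biallelic locus. The paper's own estimator is not described in this post, so treat this only as an illustration; the function name, the equal weighting of populations, and the example allele frequencies are all assumptions.

```python
def fst_biallelic(allele_freqs):
    """Wright's FST at one biallelic locus, from the frequency of one
    allele in each subpopulation (equal population weights assumed).

    H_S: mean expected heterozygosity within subpopulations.
    H_T: expected heterozygosity of the pooled population.
    """
    k = len(allele_freqs)
    mean_p = sum(allele_freqs) / k
    h_s = sum(2 * p * (1 - p) for p in allele_freqs) / k
    h_t = 2 * mean_p * (1 - mean_p)
    if h_t == 0:
        # Locus is fixed for the same allele everywhere: no variation to partition.
        return 0.0
    return (h_t - h_s) / h_t


# Hypothetical frequencies: two populations that differ only slightly,
# and two that differ more.
print(fst_biallelic([0.48, 0.52]))
print(fst_biallelic([0.45, 0.55]))
```

Under this definition, identical frequencies give an FST of 0 and populations fixed for different alleles give 1, so values in the thousandths correspond to populations whose allele frequencies typically differ by only a few percentage points.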

In Our Time has several episodes up on The Royal Society. You can listen online at the link, but I'd recommend that you just subscribe on iTunes to IOT.

Labels: History